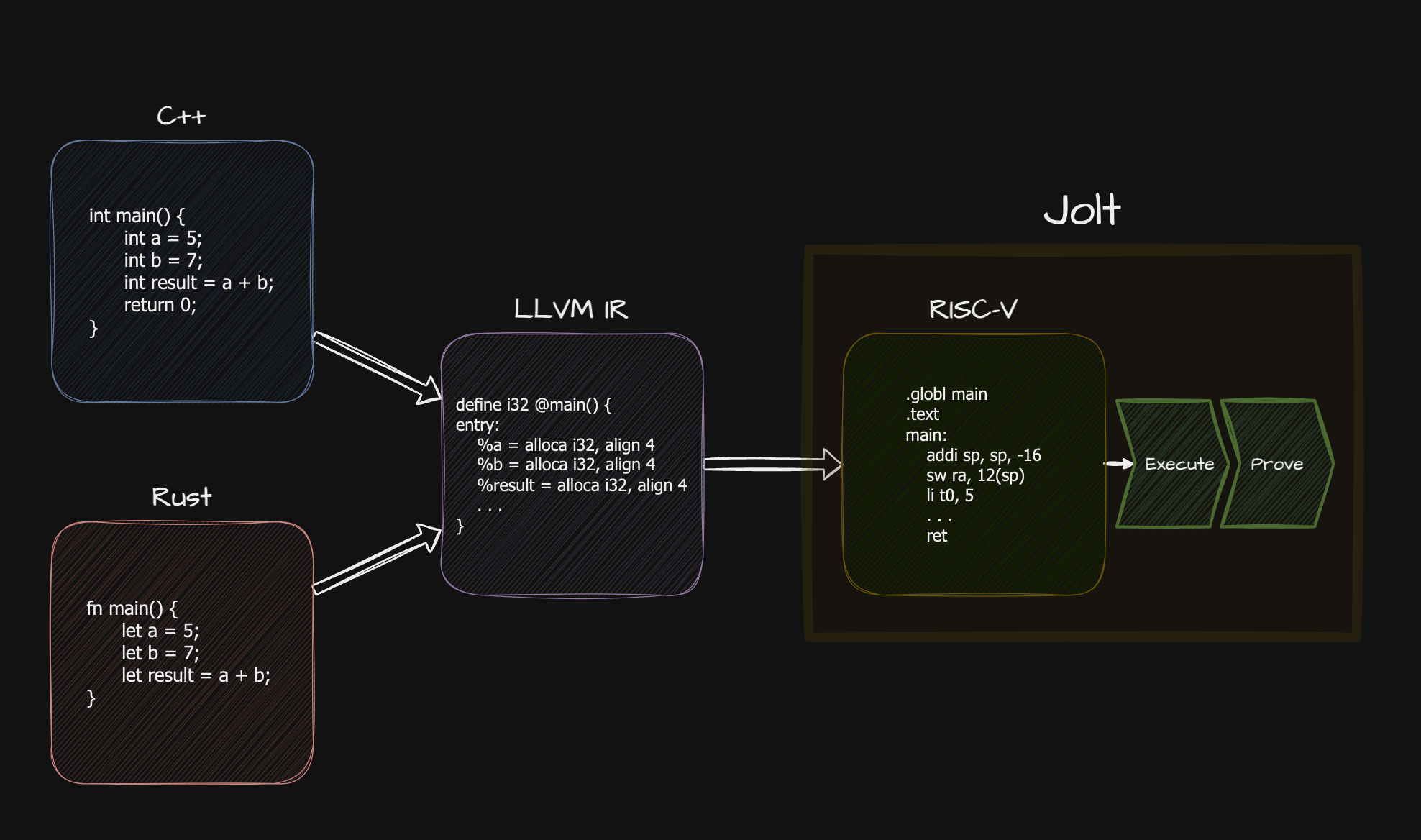

Jolt is a RISC-V zkVM uniquely designed around sumcheck and lookup arguments (Just One Lookup Table).

Jolt is fast (state-of-the-art performance on CPU) and relatively simple (thanks to its lookup-centric architecture).

This book has the following top-level sections:

- Intro (you are here)

- Usage (how to use Jolt in your applications)

- How it works (how the proof system and implementation work under the hood)

- Roadmap (what's next for Jolt)

Related reading

Papers

Jolt: SNARKs for Virtual Machines via Lookups

Arasu Arun, Srinath Setty, Justin Thaler

Twist and Shout: Faster memory checking arguments via one-hot addressing and increments

Srinath Setty, Justin Thaler

Unlocking the lookup singularity with Lasso

Srinath Setty, Justin Thaler, Riad Wahby

Blog posts

Initial launch:

Updates:

- Nov 12, 2024 blog video

- Aug 18, 2025 (Twist and Shout upgrade) blog

- Oct 15, 2025 (RV64 support) blog

Background

Credits

Jolt was initially forked from Srinath Setty's work on microsoft/Spartan, specifically the arkworks-rs/Spartan fork in order to use the excellent arkworks-rs field and curve implementations.

Jolt uses its own fork of arkworks-algebra with certain optimizations, including some described here.

Jolt's R1CS is also proven using a version of Spartan (forked from the microsoft/Spartan2 codebase) optimized for Jolt's uniform R1CS constraints.

Jolt uses Dory as its PCS, inspired by the Space and Time Labs implementation spaceandtimefdn/sxt-dory.

Usage

Quickstart

Installing

Start by installing the jolt command line tool.

cargo +nightly install --git https://github.com/a16z/jolt --force --bins jolt

Creating a Project

To create a project, use the jolt new command.

jolt new <PROJECT_NAME>

This will create a template Jolt project in the specified location. Make sure to cd into the project before running the next step.

cd <PROJECT_NAME>

Project Tour

The main folder contains the host, which is the code that can generate and verify proofs. Within this main folder, there is another package called guest which contains the Rust functions that we can prove from the host. For more information about these concepts refer to the guests and hosts section.

We'll start by taking a look at our guest. We can view the guest code in guest/src/lib.rs.

#![allow(unused)] #![cfg_attr(feature = "guest", no_std)] #![no_main] fn main() { #[jolt::provable] fn fib(n: u32) -> u128 { let mut a: u128 = 0; let mut b: u128 = 1; let mut sum: u128; for _ in 1..n { sum = a + b; a = b; b = sum; } b } }

As we can see, this implements a simple Fibonacci function called fib. All we need to do to make our function provable is add the jolt::provable macro above it.

Next let's take a look at the host code in src/main.rs.

pub fn main() { let (prove_fib, verify_fib) = guest::build_fib(); let (output, proof) = prove_fib(50); let is_valid = verify_fib(proof); println!("output: {output}"); println!("valid: {is_valid}"); }

This section simply imports guest::build_fib which is automatically generated by the jolt::provable macro, and returns functions for proving and verifying our function. The prove function takes the same inputs as the original fib function, but modifies the outputs to additionally return a proof. The verify function can then be used to check this proof, and return a boolean indicating its validity.

Running

Let's now run the host with cargo.

cargo run --release

This will compile the guest, perform some required preprocessing, and execute the host code which proves and verifies the 50th Fibonacci number. This preprocessing is run within the build_fib function and adds significant time to running the host, but only needs to be performed once. This means that we could use the prove method many times without rerunning build_fib. In the future we will support caching this across runs of the host.

Note that many logs in the example are logged at the info level. To see these logs, you can set the RUST_LOG environment variable before running the host:

RUST_LOG=info cargo run --release

If everything is working correctly, you should see output similar to the following:

...

2025-11-05T22:17:43.849979Z INFO jolt_core::zkvm: Proved in 2.6s (0.4 kHz / padded 0.8 kHz)

2025-11-05T22:17:43.923164Z INFO example: output: 12586269025

2025-11-05T22:17:43.923300Z INFO example: valid: true

Development

Rust

To build Jolt from source, you will need Rust:

curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh- Rustup should automatically install the Rust toolchain and necessary targets on

the first

cargoinvocation. You may also need to add the RISC-V target for building guest programs manually:rustup target add riscv64imac-unknown-none-elf.

mdBook

To build this book from source:

cargo install mdbook mdbook-katex

For watching the changes in your local browser:

mdbook watch book --open

Guests and Hosts

Guests and hosts are the main concepts that we need to understand in order to use Jolt as an application developer. Guests contain the functions that Jolt will prove, while hosts are where we invoke the prover and verifier for our guest functions.

Guests

Guests contain functions for Jolt to prove. Making a function provable is as easy as ensuring it is inside the guest package and adding the jolt::provable macro above it.

Let's take a look at a simple guest program to better understand it.

#![allow(unused)] #![cfg_attr(feature = "guest", no_std)] fn main() { #[jolt::provable] fn add(x: u32, y: u32) -> u32 { x + y } }

As we can see, the guest looks like a normal no_std Rust library. The only major change is the addition of the jolt::provable macro, which lets Jolt know of the function's existence. The only requirement of these functions is that its inputs are serializable and outputs are deserializable with serde. Fortunately serde is prevalent throughout the Rust ecosystem, so most types will support it by default.

There is no requirement that just a single function lives within the guest, and we are free to add as many as we need. Additionally, we can import any no_std compatible library just as we normally would in Rust.

#![allow(unused)] #![cfg_attr(feature = "guest", no_std)] fn main() { use sha2::{Sha256, Digest}; use sha3::{Keccak256, Digest}; #[jolt::provable] fn sha2(input: &[u8]) -> [u8; 32] { let mut hasher = Sha256::new(); hasher.update(input); let result = hasher.finalize(); Into::<[u8; 32]>::into(result) } #[jolt::provable] fn sha3(input: &[u8]) -> [u8; 32] { let mut hasher = Keccak256::new(); hasher.update(input); let result = hasher.finalize(); Into::<[u8; 32]>::into(result) } }

Prover and Verifier Views of Guest Program

A guest program consists of two main components: program code and inputs. Both the prover and verifier know the program code (and how it compiles to RISC-V instructions).

Jolt supports three types of inputs:

-

Public Input: These are inputs known to both the prover and verifier. The prover proves that given the bytecode and these inputs, the claimed output is correct.

-

Untrusted Advice: These inputs are known to the prover for generating the proof, but the verifier does not know them for the purpose of verification. Here, the prover proves that there exists some input that produces the claimed output.

-

Trusted Advice: Similar to untrusted advice, but the verifier has a commitment to the input generated by an external party (not the prover). The prover proves that there exists an input matching the given commitment that, when executed with the program code, produces the claimed output.

Important: While the verifier does not see advice inputs, advice inputs (both trusted and untrusted) do not currently support zero-knowledge properties and are not designed for that purpose. Their main purpose is to allow the verifier to verify an execution proof without receiving the advice inputs themselves.

A program can use any combination of these three input types. In guest code, you can define trusted inputs using jolt::TrustedAdvice<input_type> and untrusted inputs using jolt::UntrustedAdvice<input_type>. To access the data inside these wrappers, simply use deref(). These wrappers serve solely to indicate to the macro expander how to handle each input type and generate the appropriate functions for both prover and verifier.

For a complete example of advice inputs, see the merkle-tree example.

Advice inputs vs. runtime advice

Advice inputs described above are host-provided: the host supplies the data before execution, and it is written into a preallocated memory region that the guest reads from. The guest program runs once, and the advice values are fixed before execution begins.

Runtime advice is a separate mechanism where the guest itself computes advice values during a first-pass execution, and those values are fed back via an advice tape for the proving pass. This is useful when the advice depends on the guest's own execution (e.g. computing a modular inverse or finding factors) and checking the result is cheaper than computing it. Runtime advice uses #[jolt::advice] functions rather than UntrustedAdvice<T> / TrustedAdvice<T> parameters on #[jolt::provable].

Both mechanisms use the UntrustedAdvice<T> wrapper to signal that the data is untrusted and must be verified by the guest, but they differ in where the data originates and how it is serialized (advice inputs use serde; runtime advice uses AdviceTapeIO).

Standard Library

Jolt supports the Rust standard library. To enable support, simply add the guest-std feature to the Jolt import in the guest's Cargo.toml file and remove the #![cfg_attr(feature = "guest", no_std)] directive from the guest code.

Example

#![allow(unused)] fn main() { [package] name = "guest" version = "0.1.0" edition = "2021" [features] guest = [] [dependencies] jolt = { package = "jolt-sdk", git = "https://github.com/a16z/jolt", features = ["guest-std"] } }

#![allow(unused)] fn main() { #[jolt::provable] fn int_to_string(n: i32) -> String { n.to_string() } }

alloc

Jolt provides an allocator which supports most containers such as Vec and Box. This is useful for Jolt users who would like to write no_std code rather than using Jolt's standard library support. To use these containers, they must be explicitly imported from alloc. The alloc crate is automatically provided by rust and does not need to be added to the Cargo.toml file.

Example

#![allow(unused)] #![cfg_attr(feature = "guest", no_std)] fn main() { extern crate alloc; use alloc::vec::Vec; #[jolt::provable] fn alloc(n: u32) -> u32 { let mut v = Vec::<u32>::new(); for i in 0..100 { v.push(i); } v[n as usize] } }

Print statements

Jolt supports standard print! and println! macros in guest programs.

Example

#![allow(unused)] fn main() { #[jolt::provable(heap_size = 10240, max_trace_length = 65536)] fn int_to_string(n: i32) -> String { print!("Hello, "); println!("from int_to_string({n})!"); n.to_string() } }

The printed strings are written to stdout during RISC-V emulation of the guest.

Optimization level

By default, the guest program is compiled with optimization level 3 to attempt to minimize the number of RISC-V instructions executed during emulation.

To change this optimization level, set the JOLT_GUEST_OPT environment variable to one of the allowed values: 0, 1, 2, 3, s, or z.

A common choice is z which optimizes for code size, at the cost of a larger number of instructions executed.

Hosts

Hosts are where we can invoke the Jolt prover to prove functions defined within the guest.

The host imports the guest package, and will have automatically generated functions to build each of the Jolt functions. For the SHA3 example we looked at in the guest section, the jolt::provable procedural macro generates several functions that can be invoked from the host (shown below):

compile_sha3(target_dir)to compile the SHA3 guest to RISC-Vpreprocess_prover_sha3andverifier_preprocessing_from_prover_sha3to generate the prover and verifier preprocessing. Note that the preprocessing only needs to be generated once for a given guest program, and can subsequently be reused to prove multiple invocations of the guest.build_prover_sha3returns a closure for the prover, which takes in the same input types as the original function and modifies the output to additionally include a proof.build_verifier_sha3returns a closure for the verifier, which verifies the proof. The verifier closure's parameters comprise of the program input, the claimed output, aboolvalue claiming whether the guest panicked, and the proof.

pub fn main() { let target_dir = "/tmp/jolt-guest-targets"; let mut program = guest::compile_sha3(target_dir); let prover_preprocessing = guest::preprocess_prover_sha3(&mut program); let verifier_preprocessing = guest::verifier_preprocessing_from_prover_sha3(&prover_preprocessing); let prove_sha3 = guest::build_prover_sha3(program, prover_preprocessing); let verify_sha3 = guest::build_verifier_sha3(verifier_preprocessing); let input: &[u8] = &[5u8; 32]; let now = Instant::now(); let (output, proof, program_io) = prove_sha3(input); println!("Prover runtime: {} s", now.elapsed().as_secs_f64()); let is_valid = verify_sha3(input, output, program_io.panic, proof); println!("output: {}", hex::encode(output)); println!("valid: {is_valid}"); }

Runtime Advice

Runtime advice allows guest programs to offload expensive computations to the prover and receive the results via an advice tape. The guest then verifies the result cheaply, rather than recomputing it from scratch. This can dramatically reduce cycle counts for operations like modular inversion, factoring, or any computation where checking a result is cheaper than computing it.

Runtime advice is distinct from the advice inputs (TrustedAdvice<T> / UntrustedAdvice<T> parameters on #[jolt::provable]), which are host-provided data fixed before execution begins. With runtime advice, the guest itself computes the values during a first-pass execution, and those values are replayed from the advice tape during the proving pass. Use advice inputs when the host already knows the auxiliary data; use runtime advice when the data depends on the guest's own logic and checking is cheaper than computing.

How it works

Jolt uses a two-pass execution model for advice:

- First pass (compute_advice): The guest is compiled with the

compute_advicefeature flag. Advice functions execute their body and write results to the advice tape. - Second pass (proving): The guest is compiled without

compute_advice. Advice functions read precomputed results from the advice tape instead of recomputing them.

The prover handles both passes automatically. The guest code only needs to define advice functions and verify their outputs.

Defining advice functions

Annotate a function with #[jolt::advice]. The function must return jolt::UntrustedAdvice<T>, where T implements AdviceTapeIO.

#![allow(unused)] fn main() { use jolt::AdviceTapeIO; #[jolt::advice] fn modinv_advice(a: u64, m: u64) -> jolt::UntrustedAdvice<(u64, u64)> { // This body only runs during the compute_advice pass. // During proving, the result is read from the advice tape. let inv = extended_gcd(a, m); let quo = (a as u128 * inv as u128 / m as u128) as u64; (inv, quo) } }

Requirements:

- The return type must be

jolt::UntrustedAdvice<T> - Arguments must be immutable (no

mutor&mut) Tmust implementAdviceTapeIO(see Supported types below)

Verifying advice

Advice values are untrusted -- the prover could supply arbitrary data. The guest must verify correctness using check_advice! or check_advice_eq!. These macros emit prover-enforced assertions: if the condition is false, proof generation fails.

#![allow(unused)] fn main() { #[jolt::provable] fn modinv(a: u64, m: u64) -> u64 { let adv = modinv_advice(a, m); let (inv, quo) = *adv; // Deref to access the inner value // Verify: a * inv ≡ 1 (mod m) let product = (a as u128) * (inv as u128) - (quo as u128) * (m as u128); jolt::check_advice!(product == 1u128 && inv < m); inv } }

check_advice_eq! is a specialization for equality checks that directly compares two register-sized values, saving a few instructions compared to check_advice!:

#![allow(unused)] fn main() { jolt::check_advice_eq!(a * b, n, "incorrect factors"); jolt::check_advice!(1 < a && a <= b && b < n, "factors out of range"); }

Both macros accept an optional error message string as the last argument. The message is used in assert!/assert_eq! on non-RISC-V targets (useful for debugging) but is stripped from the guest binary.

Supported types

The AdviceTapeIO trait controls how values are serialized to and from the advice tape. Built-in implementations are provided for:

| Type | Notes |

|---|---|

u8, u16, u32, u64, usize | Primitive integers |

i8, i16, i32, i64 | Signed integers |

[T; N] where T: Pod | Fixed-size arrays |

(A, B), ..., (A, B, C, D, E, F, G) | Tuples up to 7 elements |

Vec<T> where T: Pod | Requires guest-std feature |

Custom structs

For structs composed of supported types, you can implement AdviceTapeIO manually:

#![allow(unused)] fn main() { struct Pair { x: u64, y: u64, } impl jolt::AdviceTapeIO for Pair { fn write_to_advice_tape(&self) { self.x.write_to_advice_tape(); self.y.write_to_advice_tape(); } fn new_from_advice_tape() -> Self { Pair { x: u64::new_from_advice_tape(), y: u64::new_from_advice_tape(), } } } }

JoltPod: automatic AdviceTapeIO via bytemuck

For #[repr(C)] structs where all fields are plain-old-data, you can derive AdviceTapeIO automatically using bytemuck and the JoltPod marker trait:

#![allow(unused)] fn main() { use bytemuck_derive::{Pod, Zeroable}; use jolt::JoltPod; #[derive(Copy, Clone, Pod, Zeroable)] #[repr(C)] struct Point { x: u32, y: u32, } impl JoltPod for Point {} }

JoltPod types get a blanket AdviceTapeIO implementation that uses bytemuck for zero-copy serialization. This works for nested structs too, as long as all types in the hierarchy are Pod.

Guest setup

The guest Cargo.toml must include a compute_advice feature:

[package]

name = "guest"

version = "0.1.0"

edition = "2021"

[features]

guest = []

compute_advice = []

[dependencies]

jolt = { package = "jolt-sdk", git = "https://github.com/a16z/jolt" }

The compute_advice feature is used by the SDK to build the first-pass binary. You do not need to activate it yourself.

Full example

Guest (guest/src/lib.rs):

#![allow(unused)] fn main() { use jolt::AdviceTapeIO; /// Compute factors of n via advice (expensive trial division runs outside the proof) #[jolt::advice] fn factor(n: u32) -> jolt::UntrustedAdvice<[u32; 2]> { for i in 2..=n { if n % i == 0 { return [i, n / i]; } } [1, n] } /// Prove that n is composite by obtaining and verifying its factors via advice #[jolt::provable] fn verify_composite(n: u32) { let adv = factor(n); let [a, b] = *adv; // Verify the factors are correct and non-trivial jolt::check_advice_eq!((a as u64) * (b as u64), n as u64); jolt::check_advice!(1 < a && a <= b && b < n); } }

Host (src/main.rs):

pub fn main() { let target_dir = "/tmp/jolt-guest-targets"; let mut program = guest::compile_verify_composite(target_dir); let shared_preprocessing = guest::preprocess_shared_verify_composite(&mut program); let prover_preprocessing = guest::preprocess_prover_verify_composite(shared_preprocessing.clone()); let verifier_setup = prover_preprocessing.generators.to_verifier_setup(); let verifier_preprocessing = guest::preprocess_verifier_verify_composite(shared_preprocessing, verifier_setup); let prove = guest::build_prover_verify_composite(program, prover_preprocessing); let verify = guest::build_verifier_verify_composite(verifier_preprocessing); let n = 221u32; // 13 * 17 let (output, proof, program_io) = prove(n); let is_valid = verify(n, output, program_io.panic, proof); assert!(is_valid); }

The host code is identical to any other Jolt program. The two-pass advice mechanism is handled transparently by the compile_* and build_prover_* functions generated by the #[jolt::provable] macro.

Profiling

If you're an application developer, you may want to profile your guest program: fewer cycles = faster proof. If so, check out Guest profiling.

If you're contributing to Jolt, you may be interested in profiling Jolt itself, in which case you should check out zkVM profiling

Profiling Jolt

Execution profiling

Jolt is instrumented using tokio-rs/tracing for execution profiling.

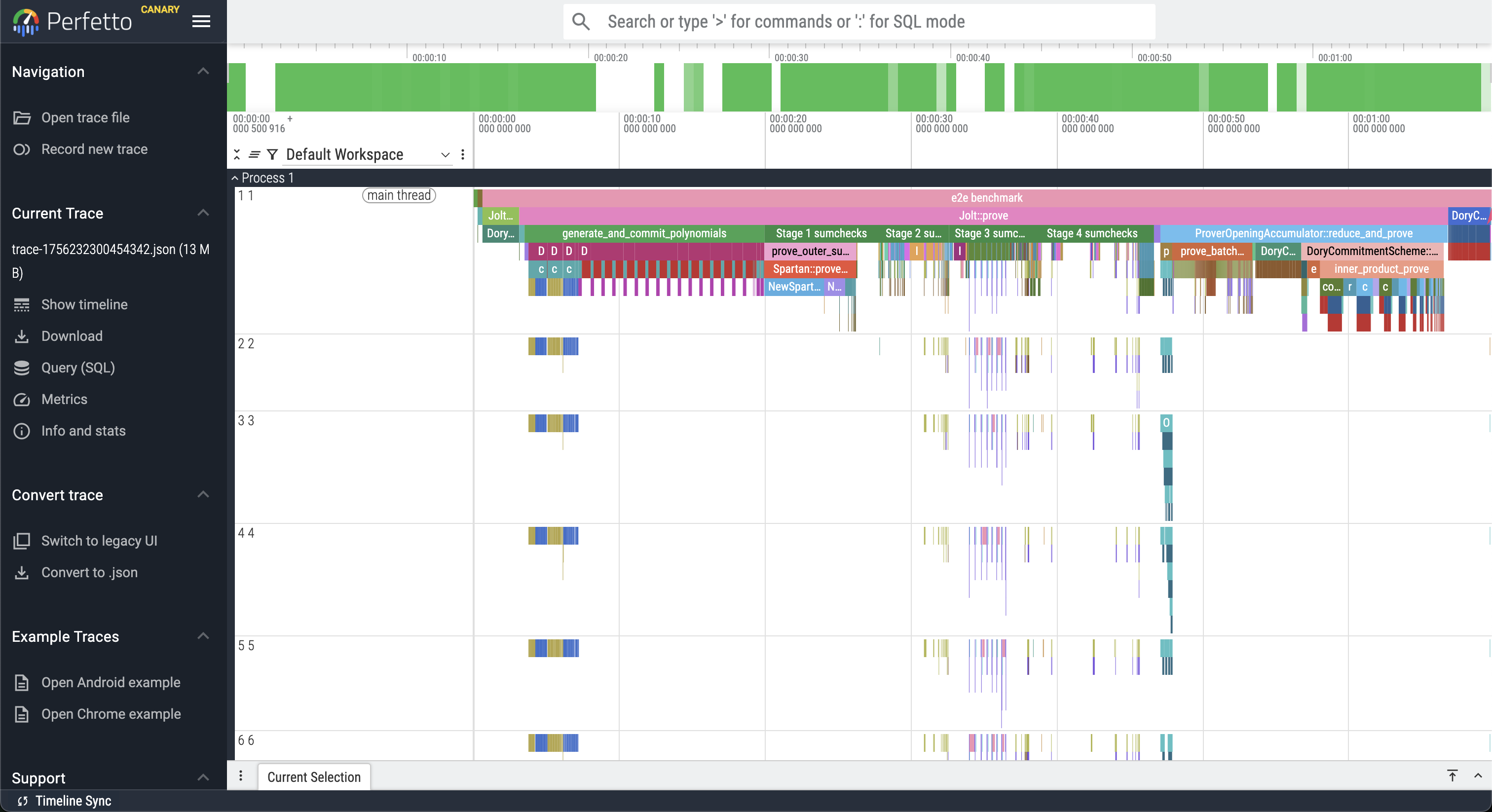

We use the tracing_chrome crate to output traces in the format expected by Perfetto.

To generate a trace, run e.g.

cargo run --release -p jolt-core profile --name sha3 --format chrome

Where --name can be sha2, sha3, sha2-chain, fibonacci, or btreemap. The corresponding guest programs can be found in the examples directory. The benchmark inputs are provided in bench.rs.

The above command will output a JSON file in the workspace rootwith a name trace-<timestamp>.json, which can be viewed in Perfetto:

System resource monitoring

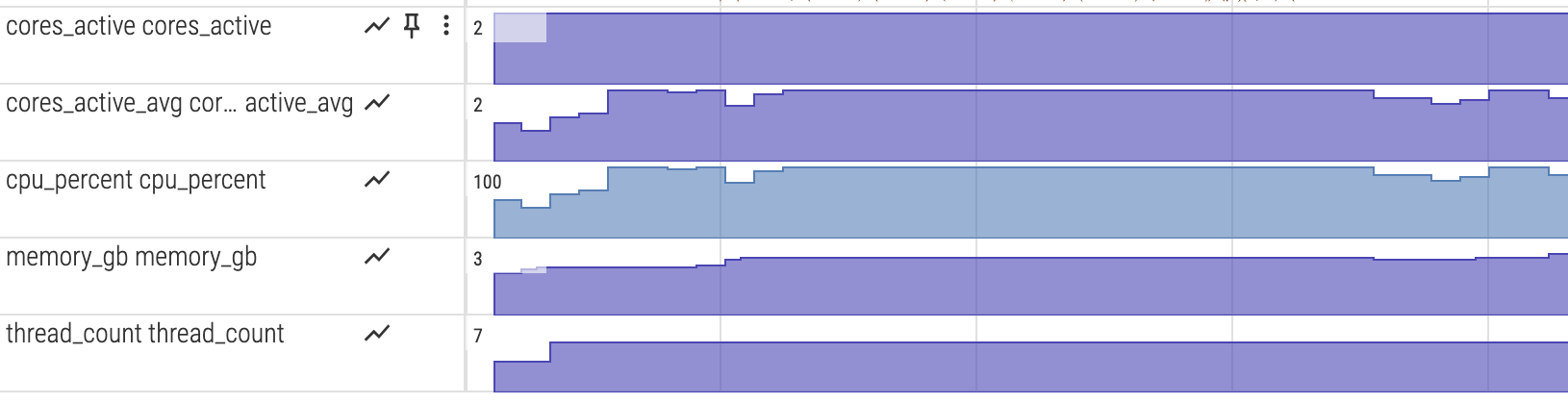

To visualize CPU and memory usage alongside the execution trace, enable the monitor feature:

cargo run --release --features monitor -p jolt-core profile --name sha3 --format chrome

python3 scripts/postprocess_trace.py trace-*.json

The postprocessing step converts the metrics into counter tracks for Perfetto.

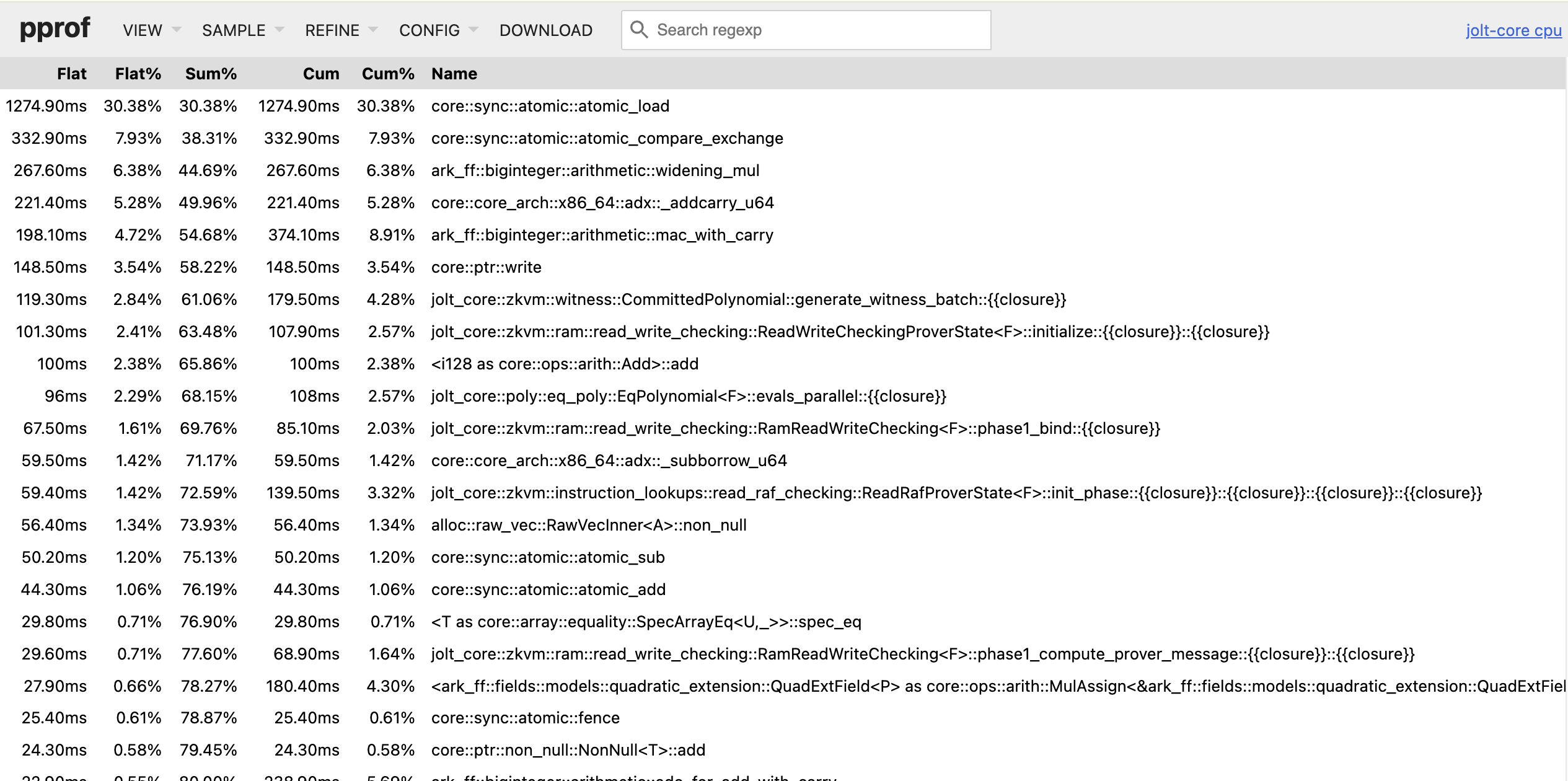

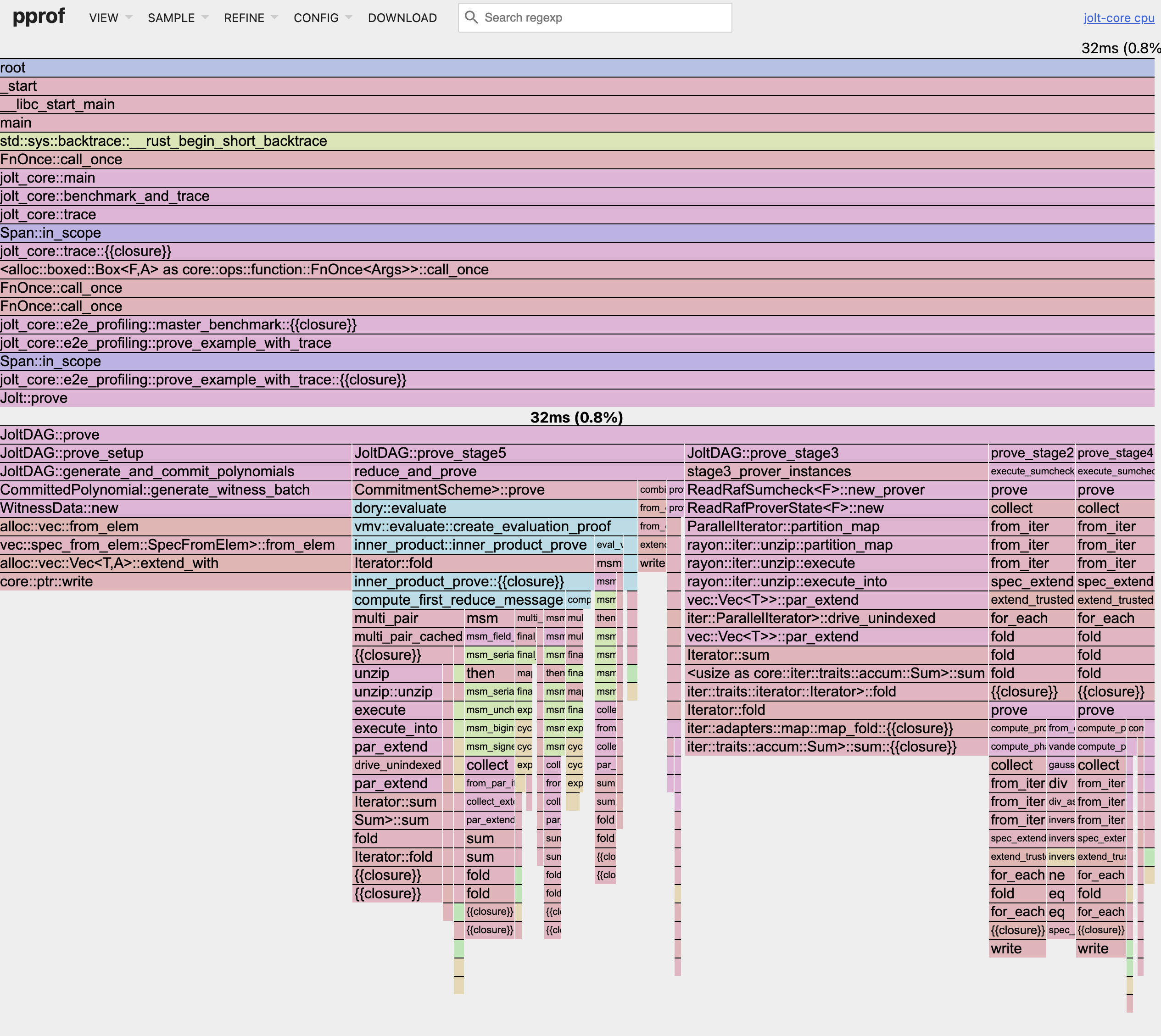

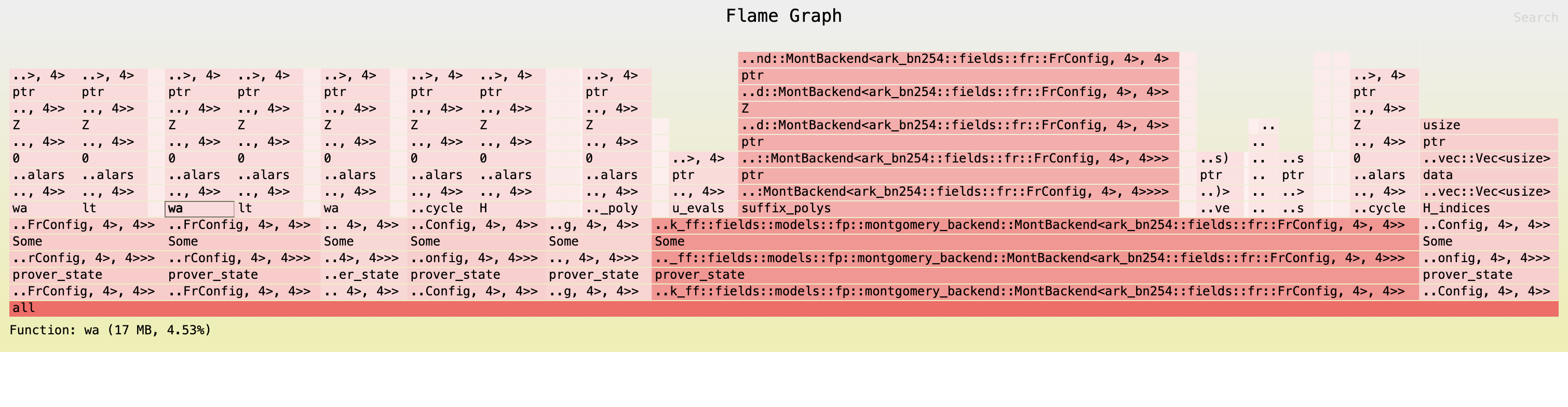

Fine-grained CPU profiling with pprof

When tracing is insufficiently detailed, you can enable pprof for fine-grained CPU profiling. While execution tracing shows you the high-level stages and their durations (based on manually instrumented code), pprof automatically samples your entire program at the function level to capture each function call including in dependencies. This can help reveal performance bottlenecks that tracing might miss, such as unexpected hotspots in serialization, memory allocation, or cryptographic operations.

To enable pprof profiling, add the --features pprof flag:

cargo run --release --features pprof -p jolt-core profile --name sha3 --format chrome

This will generate multiple .pb profile files in benchmark-runs/pprof/, one for each major stage.

To view the proving profile in your browser using pprof, run:

go tool pprof -http=:8080 target/release/jolt-core benchmark-runs/pprof/sha3_prove.pb

This will start a web server at http://localhost:8080 where you can explore:

- Flame graphs - Visual representation of call stacks and time spent

- Top functions - List of functions consuming the most CPU time

- Source view - Line-by-line breakdown of CPU usage

- Call graph - Function call relationships

You may need to increase the sampling frequency to get a more detailed profile for shorter traces or decrease it for longer tracesto reduce overhead.You may customize the sampling frequency using the PPROF_FREQ environment variable (default: 100 Hz):

PPROF_FREQ=1000 cargo run --release --features pprof -p jolt-core profile --name sha3 --format chrome

Memory profiling

Jolt uses allocative for memory profiling.

Allocative allows you to (recursively) measure the total heap space occupied by any data structure implementing the Allocative trait, and optionally generate a flamegraph.

In Jolt, most sumcheck data structures implement the Allocative trait, and we generate a flamegraph at the start and end of stages 2-7 of the Jolt DAG (see jolt_dag.rs).

To generate allocative output, run:

RUST_LOG=debug cargo run --release --features allocative -p jolt-core profile --name sha3 --format chrome

Where, as above, --name can be sha2, sha3, sha2-chain, fibonacci, or btreemap.

The above command will log memory usage info to the command line and output multiple SVG files, e.g. stage3_start_flamechart.svg, which can be viewed in a web browser of your choosing:

Guest Profiling

This section details the available tools to profile guest programs. There is currently a single utility available: Cycle-Tracking, which is detailed below.

Cycle-Tracking in Jolt

Measure real (RV64IMAC) and virtual cycles inside your RISC-V guest code with zero-overhead markers. This is useful when analyzing the mapping between the high-level guest program and the equivalent compiled program to be proven by Jolt.

Note: The Rust compiler will often shuffle around your implementation for optimization purposes, which can affect cycle tracking. If you suspect the compiler is interfering with profiling, then use the Hint module. For example, wrap values in

core::hint::black_box()(see below) to help keep your measurements honest.

API

| Function | Effect |

|---|---|

start_cycle_tracking(label: &str) | Begin a span tagged with label |

end_cycle_tracking(label: &str) | End the span started with the same label |

Under the hood each marker emits an ECALL signaling the emulator to track your code segment.

Example

#![allow(unused)] #![cfg_attr(feature = "guest", no_std)] fn main() { use jolt::{start_cycle_tracking, end_cycle_tracking}; #[jolt::provable] fn fib(n: u32) -> u128 { let (mut a, mut b) = (0, 1); start_cycle_tracking("fib_loop"); for _ in 1..n { let sum = a + b; a = b; b = sum; } end_cycle_tracking("fib_loop"); b } }

Hinting the compiler

#![allow(unused)] #![cfg_attr(feature = "guest", no_std)] fn main() { use core::hint::black_box; #[jolt::provable] fn muldiv(a: u32, b: u32, c: u32) -> u32 { use jolt::{end_cycle_tracking, start_cycle_tracking}; start_cycle_tracking("muldiv"); let result = black_box(a * b / c); // use black_box to keep code in place end_cycle_tracking("muldiv"); result } }

Wrap inputs or outputs that must stay observable during the span. In the above example, a * b / c gets moved to the return line without black_box(), causing inaccurate measurements. To run the above example, use cargo run --release -p muldiv.

Expected Output

"muldiv": 9 RV64IMAC cycles, 16 virtual cycles

Trace length: 533

Prover runtime: 0.487551667 s

output: 2223173

valid: true

Troubleshooting

Insufficient Memory or Stack Size

Jolt provides reasonable defaults for the total allocated memory and stack size. It is however possible that the defaults are not sufficient, leading to unpredictable errors within our tracer. To fix this we can try to increase these sizes. We suggest starting with the stack size first as this is much more likely to run out.

Below is an example of manually specifying both the total memory and stack size.

#![allow(unused)] #![cfg_attr(feature = "guest", no_std)] #![no_main] fn main() { extern crate alloc; use alloc::vec::Vec; #[jolt::provable(stack_size = 10000, heap_size = 10000000)] fn waste_memory(size: u32, n: u32) { let mut v = Vec::new(); for i in 0..size { v.push(i); } } }

Maximum Input or Output Size Exceeded

Jolt restricts the size of the inputs and outputs to 4096 bytes by default. Using inputs and outputs that exceed this size will lead to errors. These values can be configured via the macro.

#![allow(unused)] #![cfg_attr(feature = "guest", no_std)] #![no_main] fn main() { #[jolt::provable(max_input_size = 10000, max_output_size = 10000)] fn sum(input: &[u8]) -> u32 { let mut sum = 0; for value in input { sum += *value as u32; } sum } }

Guest Attempts to Compile Standard Library

Sometimes after installing the toolchain the guest still tries to compile with the standard library which will fail with a large number of errors that certain items such as Result are referenced and not available. This generally happens when one tries to run jolt before installing the toolchain. To address, try rerunning jolt install-toolchain, restarting your terminal, and delete both your rust target directory and any files under /tmp that begin with jolt.

Guest Fails to Compile on the Host

By default, Jolt will attempt to compile the guest for the host architecture. This is useful if you want to run and test the guest's tagged functions directly. If you know your guest code cannot compile on the host (for example, if your guest uses inline RISCV assembly), you can specify to only build for the guest architecture.

#![allow(unused)] fn main() { #[jolt::provable(guest_only)] fn inline_asm() -> (i32, u32, i32, u32) { use core::arch::asm; let mut data: [u8; 8] = [0; 8]; unsafe { let ptr = data.as_mut_ptr(); // Store Byte (SB instruction) asm!( "sb {value}, 0({ptr})", ptr = in(reg) ptr, value = in(reg) 0x12, ); } } }

Null Pointer Write / "Unknown memory mapping: 0x0"

If you see Null pointer write detected (store to 0x0) or Illegal device store: Unknown memory mapping: 0x0, it means your guest program crashed. This happens when musl's abort() cannot deliver a signal and falls back to writing to a null pointer.

The most common cause is a missing jolt-sdk feature. If your guest uses:

- rayon or threading — add

"thread"to your jolt-sdk features - randomness (getrandom) — add

"random"to your jolt-sdk features

[dependencies]

jolt = { package = "jolt-sdk", features = ["guest-std", "thread", "random"] }

To diagnose which syscall is failing, add the "debug" feature to enable syscall logging:

jolt = { package = "jolt-sdk", features = ["guest-std", "debug"] }

This prints every syscall the guest makes (e.g. [syscall] SYS_clone), which helps identify which capability is missing.

Release runs with fat LTO fail a lookup-table test

If cargo nextest run --release -p jolt-core fails at:

'zkvm::lookup_table::equal::test::prefix_suffix' panicked at jolt-core/src/zkvm/lookup_table/test.rs:108:17:

assertion `left == right` failed

this appears to be a fat LTO miscompile in the current compiler pass pipeline. This class of miscompilations is generally tracked in rust-lang/rust#116941.

Workarounds

- Prefer thin LTO (default in

Cargo.toml):profile.release.lto = "thin"

- If you must keep fat LTO, disable LLVM prepopulate passes (use Cargo’s profile override

to avoid applying LTO to proc-macro/build scripts):

CARGO_PROFILE_RELEASE_LTO=fat RUSTFLAGS="-C no-prepopulate-passes"

These avoid the miscompile while keeping release runs functional.

LTO Configuration Details

We have investigated three primary LTO configurations.

1. lto = "fat" (Problematic)

Enabling "fat" LTO without additional flags triggers a compiler miscompilation in the current toolchain.

To reproduce:

CARGO_PROFILE_RELEASE_LTO=fat cargo nextest run --release -p jolt-core -E 'test(=zkvm::lookup_table::equal::test::prefix_suffix)'

You will see an error similar to:

thread 'zkvm::lookup_table::equal::test::prefix_suffix' panicked at jolt-core/src/zkvm/lookup_table/test.rs:108:17:

assertion `left == right` failed

left: 3421757210433941145757981284077922153733430471292915370254709621273756639318

right: 15993602859885613318374216934674599210221976492830069114166327961258944178919

2. lto = "thin" (Recommended)

The above error can be fixed by setting lto = "thin". You can verify with:

CARGO_PROFILE_RELEASE_LTO=thin cargo nextest run --release -p jolt-core -E 'test(=zkvm::lookup_table::equal::test::prefix_suffix)'

3. lto = "fat" with -C no-prepopulate-passes

If you must use fat LTO, adding RUSTFLAGS="-C no-prepopulate-passes" prevents the miscompilation described above. However, this flag alters the guest ELF code generation, which can increase the execution trace length slightly.

This may cause tests with tight trace length limits—such as advice_e2e_dory—to fail.

To reproduce:

CARGO_PROFILE_RELEASE_LTO=fat RUSTFLAGS="-C no-prepopulate-passes" cargo nextest run --release -p jolt-core -E 'test(=zkvm::prover::tests::advice_e2e_dory)'

You will see a trace length error:

thread 'zkvm::prover::tests::advice_e2e_dory' panicked at jolt-core/src/zkvm/prover.rs:336:13:

Execution trace length (105501 cycles, padded to 131072) exceeds max_trace_length (65536) configured in MemoryConfig. Increase max_trace_length to at least 131072.

To fix this, simply increase the max_trace_length in the failing test setup. For example, in advice_e2e_dory:

let shared_preprocessing = JoltSharedPreprocessing::new(

bytecode.clone(),

io_device.memory_layout.clone(),

init_memory_state,

- 1 << 16,

+ 1 << 17,

);

Getting Help

If none of the above solve the problem, please create a Github issue with a detailed bug report including the Jolt commit hash, the hardware or container configuration used, and a minimal guest program to reproduce the bug.

How it works

This section dives into in the inner workings of Jolt, beyond what an application developer needs to know. It covers the architecture, design decisions, and implementation details of Jolt. Proceed if you:

-

have been poking around the Jolt codebase and have questions about why something is implemented the way it is

-

have read some papers (Jolt, Twist/Shout) and want to know how things fit together in practice

-

would like to start contributing to Jolt

This section is comprised of the following subsections:

Architecture overview

This section gives a overview of the architecture of the Jolt codebase.

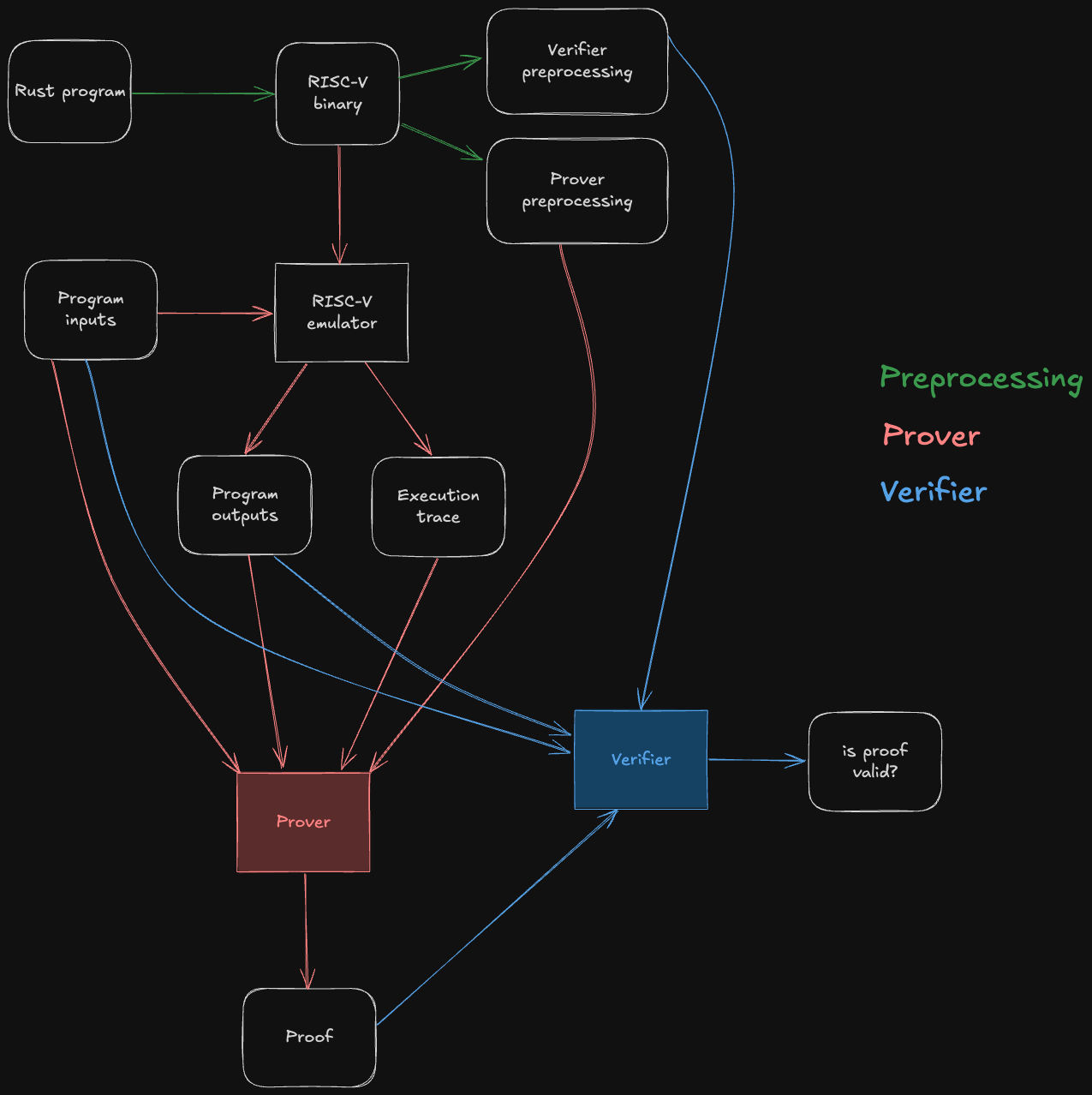

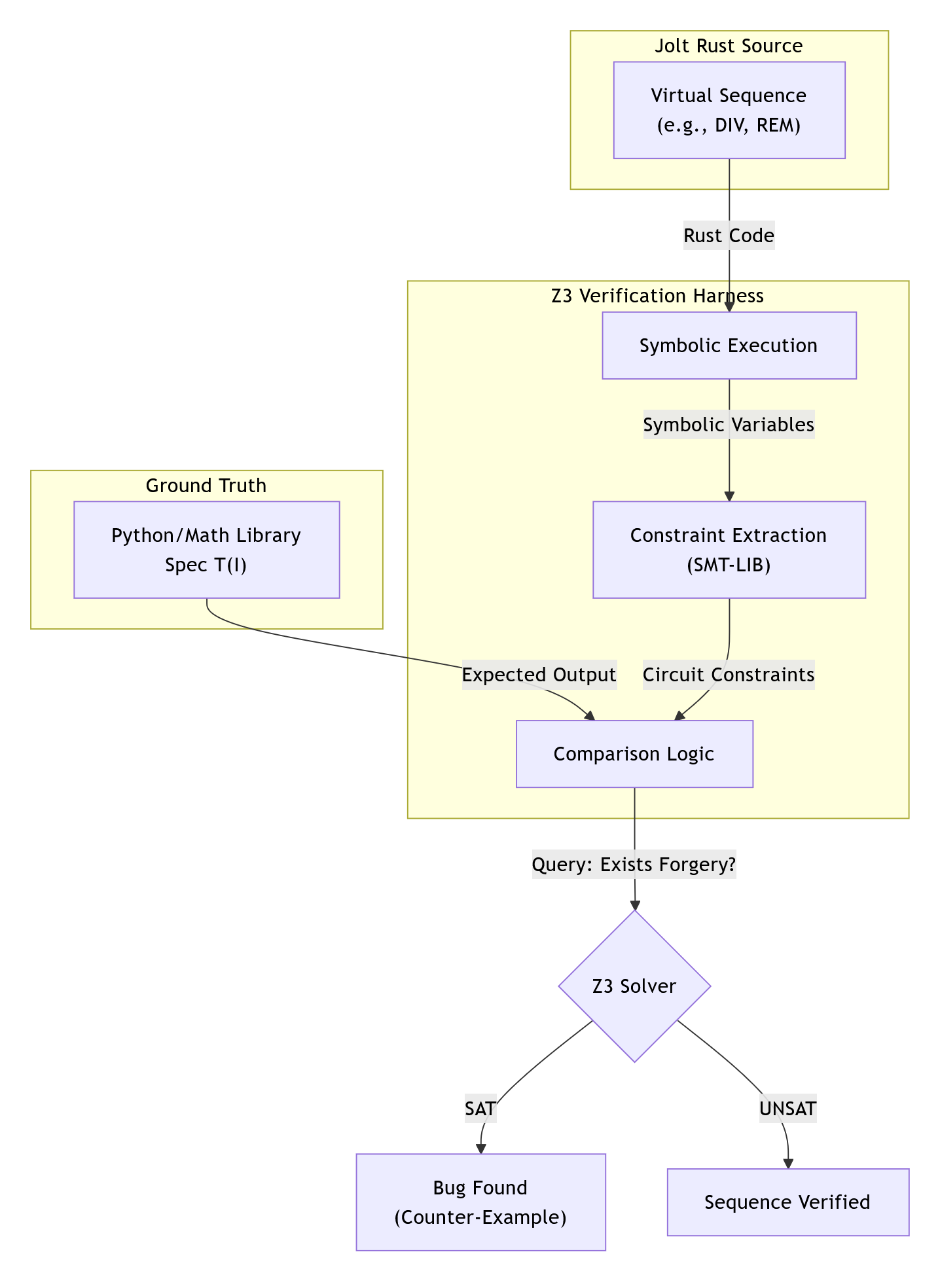

The following diagram depicts the end-to-end Jolt pipeline, which is typical of RISC-V zkVMs.

The green steps correspond to preprocessing steps, which can be computed ahead of time from the guest program's bytecode. Note that they do not depend on the program inputs, so the preprocessing data can be reused across multiple invocations of the Jolt prover/verifier.

The red steps correspond to the prover's workflow: it runs the guest program on some inputs inside of a RISC-V emulator, producing the output of the program as well as an execution trace. This execution trace, along with the inputs, outputs, and preprocessing, are then passed to the Jolt prover, which uses them to generate a succinct proof that the outputs are the result of correctly executing the given guest program on the inputs.

The blue steps correspond to the verifier's workflow. The verifier does not emulate the guest program, not does it receive the execution trace (which may be billions of cycles long) from the prover. Instead, it receives the proof from the prover, and uses it to check that the guest program produces claimed outputs when run on the given inputs.

For the sake of readability, the diagram above abstracts away all of Jolt's proof system machinery. The rest of this section aims to disassemble the underlying machinery in useful-but-not-overwhelming detail.

As a starting point, we will present two useful mental models of the Jolt proof system: Jolt as a CPU, and Jolt as a DAG (a different DAG than the one above; we love DAGs).

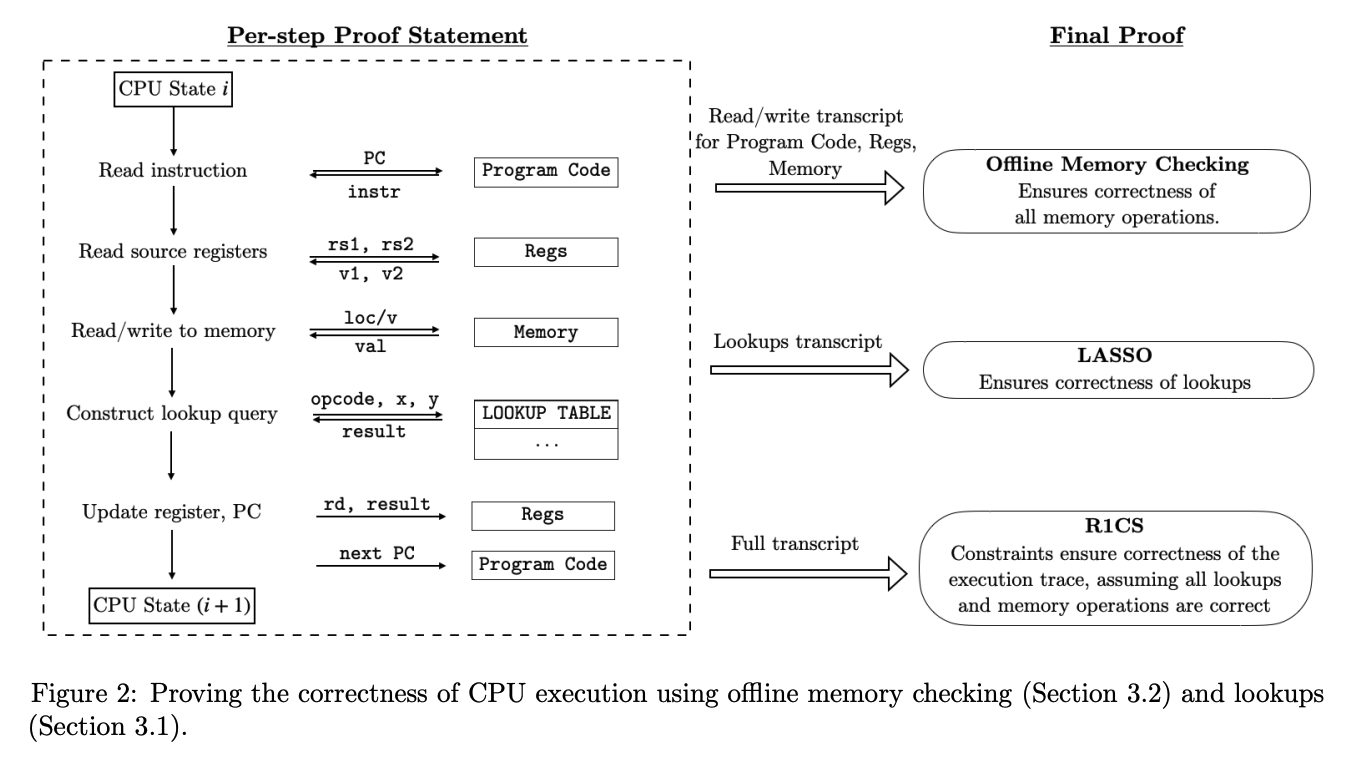

Jolt as a CPU

One way to understand Jolt is to map the components of the proof system to the components of the virtual machine (VM) whose functionality they prove.

Jolt's five components

A VM does two things:

- Repeatedly execute the fetch-decode-execute logic of its instruction set architecture.

- Perform reads and writes to memory.

The Jolt paper depicts these two tasks mapped to three components in the Jolt proof system:

The Jolt codebase is similarly organized, but instead distinguishes read-write memory (comprising registers and RAM) from program code (aka bytecode, which is read-only), for a total of five components:

Note that in Jolt as described in the paper, as well as in the first version of the Jolt implementation (v0.1.0), the memory checking and lookup argument used are Spice and Lasso, respectively. As of v0.2.0, Jolt replaces Spice and Lasso with Twist and Shout, respectively.

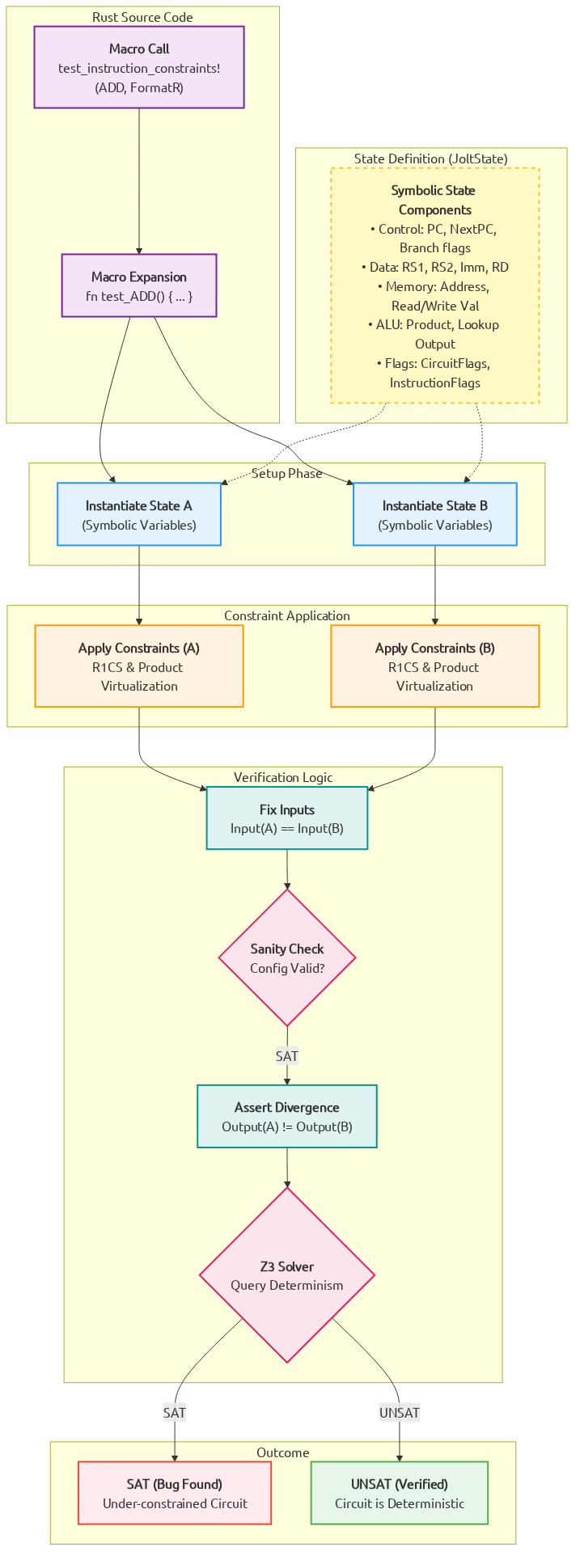

R1CS constraints

To handle the "fetch" part of the fetch-decode-execute loop, there is a minimal R1CS instance (about 20 constraints per cycle of the RISC-V VM). These constraints handle program counter (PC) updates and serves as the "glue" enforcing consistency between polynomials used in the components below. Jolt uses Spartan, optimized for the highly-structured nature of the constraint system (i.e. the same small set of constraints are applied to every cycle in the execution trace).

For more details: R1CS constraints

RAM

To handle reads/writes to RAM Jolt uses the Twist memory checking argument.

For more details: RAM

Registers

Similar to RAM, Jolt uses the Twist memory checking argument to handle reads/writes to registers.

For more details: Registers

Instruction execution

To handle the "execute" part of the fetch-decode-execute loop, Jolt invokes the Shout lookup argument. The lookup table, in this case, effectively maps every instruction to its correct output.

For more details: Instruction execution

Bytecode

To handle the "decode" part of the fetch-decode-execute loop, Jolt uses another instance of Shout. The bytecode of the guest program is "decoded" in preprocessing, and the prover subsequently invokes offline memory-checking on the sequence of reads from this decoded bytecode corresponding to the execution trace being proven.

For more details: Bytecode

Jolt as a DAG

One useful way to understand Jolt is to view it as a directed acyclic graph (DAG). That might sound surprising -- how can a zkVM be represented as a DAG? The key lies in the structure of its sumchecks, and in particular, the role of virtual polynomials.

Virtual vs. Committed Polynomials

Virtual polynomials, a concept introduced in the Binius paper, are used heavily in Jolt.

A virtual polynomial is a part of the witness that is never committed directly. Instead, any claimed evaluation of a virtual polynomial is proven by a subsequent sumcheck. In contrast, committed polynomials are committed to explicitly and their evaluaions are proven using the opening proof of the PCS.

Sumchecks as Nodes

Each sumcheck in Jolt corresponds to a node in the DAG. Consider the following hypothetical sumcheck expression:

Here, the left-hand side is the input claim, and the right-hand side is a sum over the Boolean hypercube of a multivariate polynomial of degree 2, involving three multilinear terms , , and . The sumcheck protocol reduces this input claim to three output claims about , , and :

where consists of the random challenges obtained over the course of the sumcheck.

An output claim of one sumcheck might be the input claim of another sumcheck. Recall that by definition, the output claim for a virtual polynomial is necessarily the input claim of another sumcheck.

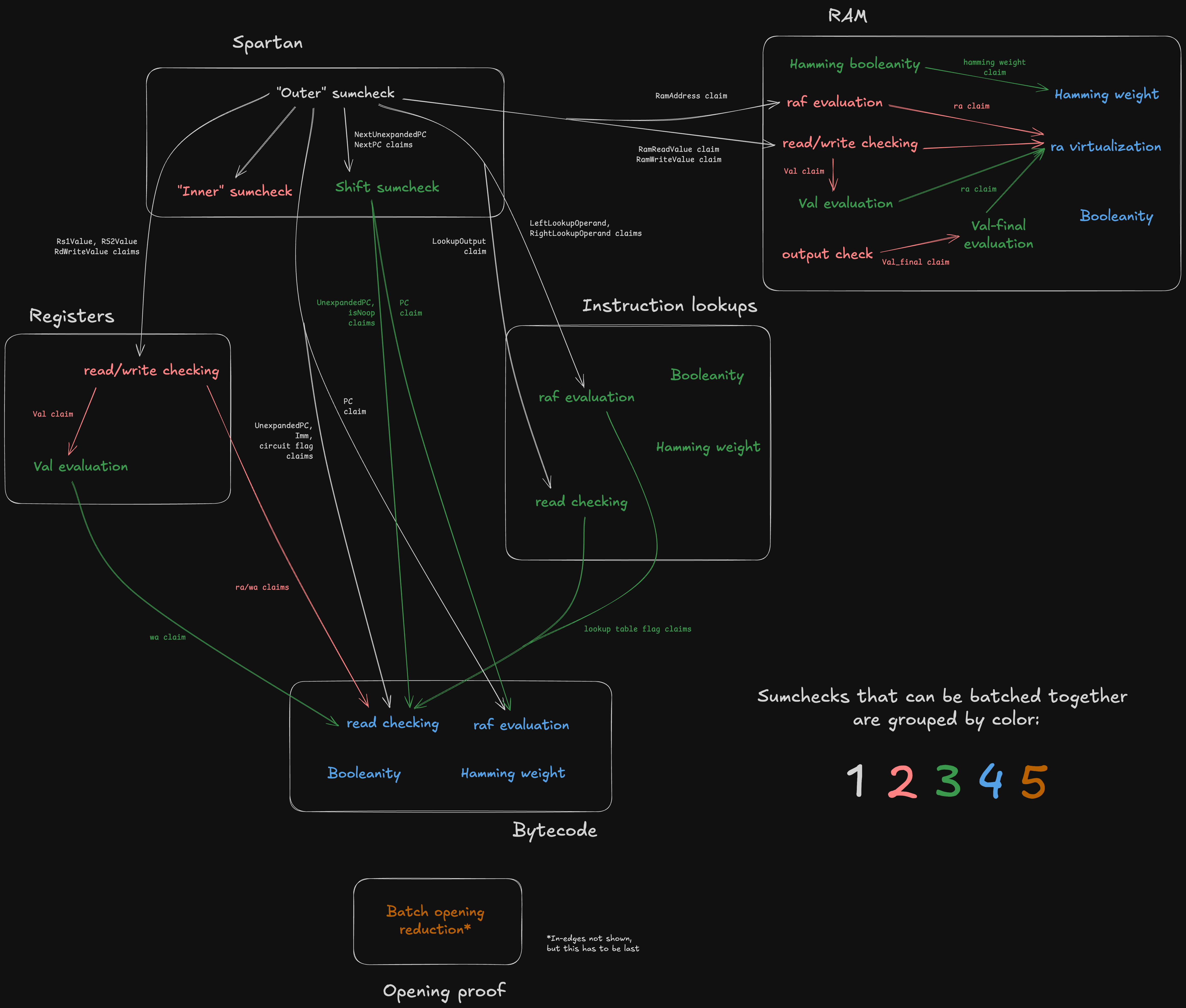

It follows that input claims can be viewed as in-edges and output claims as out-edges, thus defining the graph structure. This is what the Jolt sumcheck DAG looks like:

Note the white boxes corresponding to the five components of Jolt under the CPU paradigm –– plus two extra components ("R1CS input virtualization" and "One-hot checks") which don't have a CPU analogue.

In the diagaram above, sumchecks are color-coded based on "batch"; sumchecks in the same batch are "run in parallel" (see Batched sumcheck). Note that a given component may include sumchecks in different batches. For example, the sumchecks under "Registers" span stages 3, 4, 5, and 6.

The sumcheck stages are codified in zkvm/prover.rs (resp. zkvm/verifier.rs), which contain functions prove_stage1, prove_stage2 (resp. verify_stage1, verify_stage2) etc.

The function for a given stage declares which sumcheck instances the stage contains.

Observe that the DAG defines a partial ordering over the nodes (sumchecks), which consequently defines the minimum number of batches -- two sumchecks cannot be batched together if one "depends" on the other.

The terminal nodes in the graph –– namely, the "Inc claim reduction" and the Hamming weight + ra claim reduction" –– output claims that are directly proven using the PCS opening proof. For more details on the batched opening proof subprotocol, see Opening proof.

Managing state and data flow

The ProverOpeningAccumulator (resp. VerifierOpeningAccumulator) is responsible for managing the flow of opening claims –– the edges of the DAG.

The opening accumulator contains a mapping from OpeningId to claimed polynomial evaluation.

As sumchecks are proven, their output claims (for both virtual and committed polynomials) are inserted into the map. Later sumchecks can then consume the virtual polynomial openings as input claims.

While virtual polynomial claims are used internally and passed between sumchecks, committed polynomial claims are tracked because they must ultimately be verified via a batched Dory opening proof at the end of the protocol.

The input_claim and cache_openings methods on the SumcheckInstanceProver and SumcheckInstanceVerifier traits provide hooks to the respective accumulator objects.

input_claim effectively declares the in-edges for the sumcheck instance, while cache_openings effectively declares the out-edges.

RISC-V emulation

Jolt's RISC-V emulator is implemented within the tracer crate.

It originated as a fork of the riscv-rust project and has since evolved significantly to support Jolt’s unique requirements.

The bulk of the emulator's logic resides in the instruction subdirectory of the tracer crate, which defines the behavior of individual RISC-V instructions.

Core traits

Two key traits form the backbone of the RISC-V emulator:

-

RISCVInstruction- Defines core instruction behavior required by the tracer.

- Includes two associated constants,

MASKandMATCH, used for decoding instructions from raw bytes. - Has an associated type

Format, indicating the RISC-V instruction format (e.g., R-type or I-type). - The associated type

RAMAccessspecifies memory access (read/write) required by the instruction, guiding the tracer in capturing memory state before and after execution. - Its critical method,

execute, emulates instruction execution by modifying CPU state and populating memory state changes as needed.

-

RISCVTrace(extendsRISCVInstruction)- Adds a

tracemethod, which by default invokesexecuteand additionally captures pre- and post-execution states of any accessed registers or memory. May be overridden for instructions which employ virtual sequences, see below. - Constructs a

RISCVCyclestruct instance from the captured information and appends it to a trace vector.

- Adds a

Core enums

Instruction and Cycle are enums encapsulating all RV64IMAC instruction variants, wrapping implementations of RISCVInstruction and RISCVCycle, respectively.

Virtual Instructions and Sequences

A crucial concept in Jolt is that of virtual instructions and virtual sequences, introduced in section 6 of the Jolt paper. Virtual instructions don't exist in the official RISC-V ISA but are specifically created to facilitate proving.

Reasons for Using Virtual Instructions:

Complex operations (e.g. division)

Some instructions, like division, don't neatly adhere to the lookup table structure required by prefix-suffix Shout. To handle these cases, the problematic instruction is expanded into a virtual sequence, a series of instructions (some potentially virtual).

For instance, division involves a sequence described in detail in section 6.3 of the Jolt paper, utilizing virtual untrusted "advice" instructions. In the context of division, the advice instructions store the quotient and remainder in virtual registers, which are additional registers used exclusively within virtual sequences as scratch space. The rest of the division sequence verifies the correctness of the computed quotient and remainder, finally storing the quotient in the destination register specified by the original instruction.

Atomic operations (LR/SC)

The RISC-V "A" extension's Load-Reserved/Store-Conditional (LR/SC) instructions are implemented using virtual sequences with dedicated reservation registers. These are a pair of width-specific virtual registers that track the address reserved by the most recent LR instruction:

reservation_w(virtual register 32): Set byLR.W, checked bySC.Wreservation_d(virtual register 33): Set byLR.D, checked bySC.D

When LR.W executes, it writes the reservation address into reservation_w (vr32) and clears reservation_d (vr33). Conversely, LR.D writes into reservation_d (vr33) and clears reservation_w (vr32). This cross-clear mechanism prevents mixed-width LR/SC pairing: an SC.W after LR.D will always fail because the word-width reservation register was cleared by the LR.D.

SC failure path

SC.W and SC.D must handle both success and failure within the zkVM's constraint system. This is accomplished using VirtualAdvice:

- The prover supplies a success/failure bit via

VirtualAdvice, constrained to usingVirtualAssertLTE. - A derived

v_successflag () gates the reservation check: . On success (), this forces the reservation to match the target address. On failure (), the constraint is trivially satisfied. - The store is conditional: . On success this writes

rs2; on failure it writes back the original memory value (a no-op). - The destination register is set to 0 on success, 1 on failure.

Soundness: A malicious prover cannot claim success without a valid reservation, because the constraint would be violated. However, the prover can claim spurious failure even with a valid reservation, which is permitted by the RISC-V specification (SC is allowed to fail spuriously).

Per the RISC-V specification, SC always invalidates all reservations regardless of whether the store succeeded or failed. Both reservation_w and reservation_d are cleared to zero at the end of any SC instruction.

Performance optimization (inlines)

Virtual sequences can also be employed to optimize prover performance on specific operations, e.g. hash functions. For details, refer to Inlines.

Implementation details

Virtual instructions reside alongside regular instructions within the instructions subdirectory of the tracer crate.

Instructions employing virtual sequences implement the VirtualInstructionSequence trait, explicitly defining the sequence for emulation purposes.

The execute method executes the instruction as a single operation, while the trace method executes each instruction in the virtual sequence.

Performance considerations

A first-order approximation of Jolt's prover cost profile is "pay-per-cycle": each cycle in the trace costs roughly the same to prove, regardless of which instruction is being proven or whether the given cycle is part of a virtual sequence. This means that instructions that must be expanded to virtual sequences are more expensive than their unexpanded counterparts. An instruction emulated by an eight-instruction virtual sequence is approximately eight times more expensive to prove than a single, standard instruction.

On the other hand, virtual sequences can be used to improve prover performance on key operations (e.g. hash functions). This is discussed in the Inlines section.

R1CS constraints

Jolt employs Rank-1 Constraint System (R1CS) constraints to enforce the state transition function of its RISC-V virtual machine and to serve as a bridge between its various components. Jolt uses Spartan to prove its R1CS constraints.

Enforcing state transition

The R1CS constraints defined in constraints.rs include conditions ensuring that the program counter (PC) is updated correctly for each execution step.

These constraints capture:

-

The "normal" case, where the PC is incremented to point to the next instruction in the bytecode

-

Jump and branch instructions, where the PC may be updated according to some condition and offset

Linking sumcheck instances

Jolt leverages Twist and Shout instances, each comprising sumchecks.

Jolt's R1CS constraints provide essential "links" between these sumchecks, enforcing relationships between different polynomials.

For example, R1CS ensures consistency between independent components such as the RAM and register Twist instances: for instructions like LB or LW, an R1CS constraint ensures the value read from RAM matches the value written into the destination register (rd).

This is critical because RAM and registers operate as independent Twist memory checking instances, and we need to enforce this relationship between otherwise disjoint witnesses.

Arithmetic instructions

While Jolt's design uniquely leverages lookups for most RISC-V instructions, arithmetic instructions are the exception to this rule. Most arithmetic instructions (addition, subtraction, multiplication) are primarily constrained using R1CS constraints, with the lookup only serving to truncate potential overflow bits.

For these instructions, we can emulate the 64-bit arithmetic using native field arithmetic, since Jolt's elliptic curve scalar field is big enough to perform these operations without overflow.

E.g. to add two 64-bit numbers x and y, we can add their equivalents and , knowing that will be equivalent to the desired 65-bit sum.

We then employ a range-check lookup to truncate the potential overflow bit, thus matching the behavior of the RISC-V spec.

Circuit Flags

Circuit flags, also referred to as operation (op) flags, are boolean indicators associated with each instruction. These circuit flags are deterministically derived from the instruction's opcode and operands as they appear in the bytecode. For this reason, they are treated as "read values" (rv) in the context of the bytecode Shout instance.

Some examples of circuit flags:

-

Jump Flag: Indicates jump instructions (JAL, JALR).

-

Load Flag: Indicates load instructions (e.g. LB, LW).

-

AddOperands Flag: Indicates that the instruction operands should be added "in the field", as described above (e.g. ADD, ADDI, AUIPC).

These flags appear in the R1CS constraints. For example, the "load" flag enables the aforementioned RAM-to-register constraint specifically for load instructions.

Spartan

🚧 These docs are under construction 🚧

👷If you are urgently interested in this specific page, open a Github issue and we'll try to expedite it.👷

Registers

Jolt proves the correctness of register updates using the Twist memory checking algorithm, specifically utilizing the "local" prover algorithm.

In this Twist instance, because we have 32 RISC-V registers and 32 virtual registers. This is small enough that we can use .

Virtual register layout

The 32 virtual registers (registers 32--63) are partitioned into three regions:

| Register(s) | Name | Purpose |

|---|---|---|

| 32 | reservation_w | Reservation address for LR.W/SC.W |

| 33 | reservation_d | Reservation address for LR.D/SC.D |

| 34 | mtvec | Machine trap-vector base address (CSR 0x305) |

| 35 | mscratch | Machine scratch register (CSR 0x340) |

| 36 | mepc | Machine exception program counter (CSR 0x341) |

| 37 | mcause | Machine trap cause (CSR 0x342) |

| 38 | mtval | Machine trap value (CSR 0x343) |

| 39 | mstatus | Machine status (CSR 0x300) |

| 40--46 | (instruction) | Temporary registers for virtual sequences, allocated by allocate() |

| 47--63 | (inline) | Temporary registers for inline sequences, allocated by allocate_for_inline() |

Reserved registers (32--39) are persistent: they retain their values across instructions and are never handed out by the allocator. The two reservation registers are used by the LR/SC virtual sequences to track atomic reservations. The six CSR registers store M-mode control/status state for trap handling.

Instruction registers (40--46) are a pool of 7 temporary registers used by allocate() within virtual sequences (e.g., for division, SC). They are released back to the pool when the virtual sequence completes.

Inline registers (47--63) are used by allocate_for_inline() for inline sequences. All inline registers must be zeroed at the end of the inline sequence to ensure clean state.

Deviations from the Twist algorithm as described in the paper

Our implementation of the Twist prover algorithm differs from the description given in the Twist and Shout paper in a couple of ways. One such deviation is wv virtualization. Other, register-specific deviations are described below.

Two reads, one write per cycle

The Twist algorithm as described in the paper assumes one read and one write per cycle, with corresponding polynomials (read address) and (write address).

However, in the context of the RV64IMAC instruction set, a single instruction (specifically, an R-type instruction) can read from two source registers (rs1 and rs2) and write to a destination register (rd).

Thus, we have two polynomials corresponding to rs1 and rs2, plus a ) polynomial corresponding to rd.

Similarly, there are two polynomials and one polynomial.

As a result, we have two read-checking sumcheck instances and one write-checking sumcheck instance. In practice, all three are batched into a single sumcheck instance.

Why we don't need one-hot checks

Normally in Twist and Shout, polynomials like and need to be checked for one-hotness using the Booleanity and Hamming weight sumchecks.

In the context of registers, we do not need to perform these one-hot checks for , , and .

Observe that the registers are hardcoded in the program bytecode. Thus we can virtualize , , and using the bytecode read-checking sumcheck. Since the bytecode is known by the verifier and assumed to be "well-formed", the soundness of the read-checking sumcheck effectively ensures the one-hotness of , , and .

A (simplified) sumcheck expression to illustrate how this virtualization works:

where if the instruction at index in the bytecode has .

RAM

Jolt proves the correctness of RAM operations using the Twist memory checking algorithm, specifically utilizing the "local" prover algorithm.

Dynamic parameters

In Twist, the parameter determines the size of the memory. For RAM, unlike registers, is not known a priori and depends on the memory usage of the guest program. Consequently, the parameter , dictating how the memory address space is partitioned into chunks, must also be dynamically tuned. This ensures that no committed polynomial exceeds a maximum size defined by .

Jolt is currently configured so that if and otherwise. Using smaller gives better prover performance for shorter trace lengths.

Address remapping

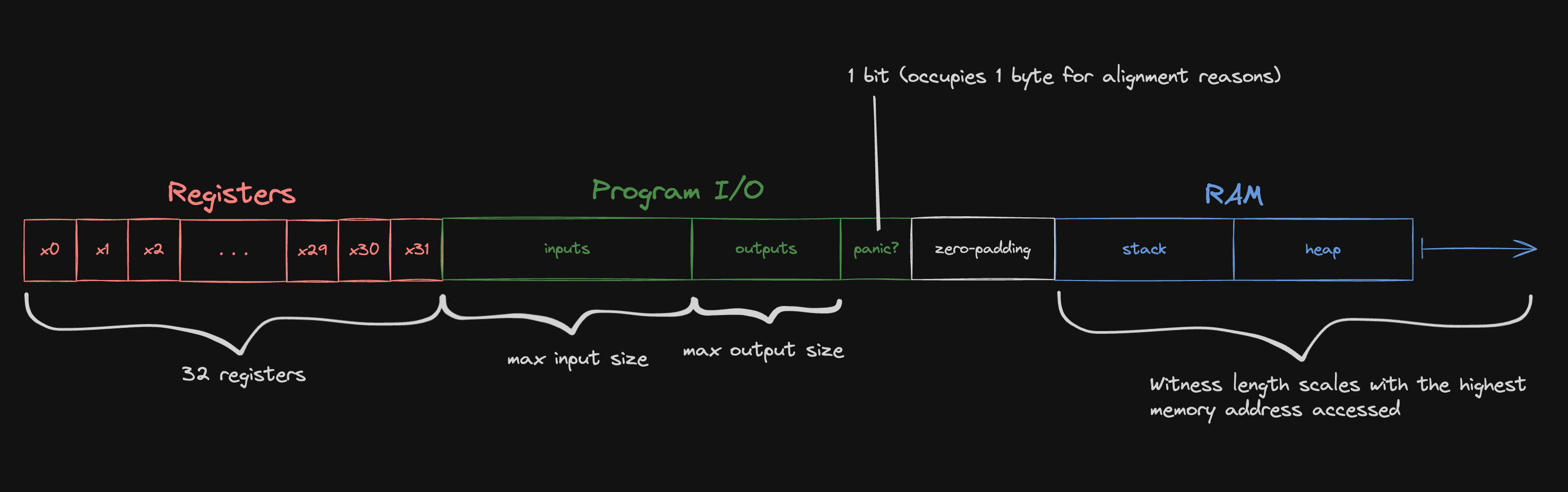

We treat each 8-byte-aligned doubleword in the guest memory as one "cell" for the purposes of memory checking.

Our RISC-V emulator is configured to use 0x80000000 as the DRAM start address -- the stack and heap occupy addresses above the start address, while Jolt reserves some memory below the start address for program inputs and outputs.

For the purposes of the memory checking argument, we remap the memory address to a witness index:

#![allow(unused)] fn main() { (address - memory_layout.lowest_address) / 8 }

where lowest_address is the left-most address depicted in the diagram above.

The division by eight reflects the fact that we treat guest memory as "doubleword-addressable" for the purposes of memory-checking.

Any load or store instructions that access less than a full doubleword (e.g. LB, SH, LW) are expanded into inline sequences that use the LD or SD instead.

Deviations from the Twist algorithm as described in the paper

Our implementation of the Twist prover algorithm differs from the description given in the Twist and Shout paper in a couple of ways. One such deviation is wv virtualization. Other, RAM-specific deviations are described below.

Single operation per cycle

The Twist algorithm as described in the paper assumes one read and one write per cycle, with corresponding polynomials (read address) and (write address). However, in the context of the RV64IMAC instruction set, only a single memory operation -- either a read or a write (or neither) -- is performed per cycle. Thus, a single polynomial (merging and ) suffices, simplifying and optimizing the algorithm. This polynomial is referred to as for the rest of this document.

No-op cycles

Many instructions do not access memory, so we represent them using a row of zeros in the polynomial rather than the one-hot encoding of the accessed address. Having more zeros in makes it cheaper to commit to and speeds up some of the other Twist sumchecks. This modification necessitates adjustments to the Hamming weight sumcheck, which would otherwise enforce that the Hamming weight for each "row" in is 1.

We introduce an additional Hamming Booleanity sumcheck:

where is the Hamming weight polynomial, which can be virtualized using the original Hamming weight sumcheck expression:

For simplicity, these equations are presented for the case, but as described above, for RAM is dynamic and can be greater than one.

ra virtualization

In Twist as described in the paper, a higher parameter would translate to higher sumcheck degree for the read checking, write checking, and evaluation sumchecks. Moreover, is fundamentally incompatible with the local prover algorithm for the read/write checking sumchecks.

To leverage the local algorithm while still supporting , Jolt simply carries out the read checking, write checking, and evaluation sumchecks as if , i.e. with a single (virtual) polynomial.

At the conclusion of these sumchecks, we are left with claims about the virtual polynomial. Since the polynomial is uncommitted, Jolt invokes a separate sumcheck that expresses an evaluation in terms of the constituent polynomials. The polynomials are, by definition, a tensor decomposition of , so the "ra virtualization" sumcheck is the following:

Advice Inputs

The evaluation sumcheck in Twist requires both the prover and verifier to know the initial state of the lookup table. When advice inputs are present in the RAM lookup table, the verifier doesn't have direct access to these values. To address this, the prover provides evaluations of the advice inputs and later proves their correctness against commitments held by the verifier.

For trusted advice, the commitment is generated externally; for untrusted advice, the prover generates the commitment. All advice inputs are placed at the lowest addresses of the RAM lookup table, with the larger advice type placed first to minimize field multiplications during verification.

We represent advice inputs and the RAM lookup table as multi-linear polynomials, assuming their sizes are powers of two.

Let:

- be the prover's RAM lookup table polynomial (including advice)

- be the verifier's RAM lookup table polynomial (zeros in advice section, otherwise identical to prover's)

- be the trusted advice polynomial

- be the untrusted advice polynomial

where (if this condition isn't met, we reorder the advice types to ensure the larger one comes first).

Let be the position of the single set bit in within its -bit representation. This position corresponds to bit when , or when .

The relationship between these polynomials is:

Since the verifier only needs to evaluate at a random point, it can compute this value efficiently using and the evaluations of the trusted and untrusted components.

Example

Consider a concrete example with:

- Total memory: 8192 elements ()

- Trusted advice: 1024 elements ()

- Untrusted advice: 128 elements ()

The memory layout would be:

- Addresses with the 3 MSBs as

000: trusted advice region (all combinations of the remaining 10 bits) - Addresses with the MSBs as

001000: untrusted advice region (all combinations of the remaining 7 LSBs)

This arrangement minimizes verifier computation. If we placed untrusted advice first, the verifier would need to check multiple bit patterns (000001, 000010, etc.) for the trusted section, resulting in significantly more field multiplications.

Output Check

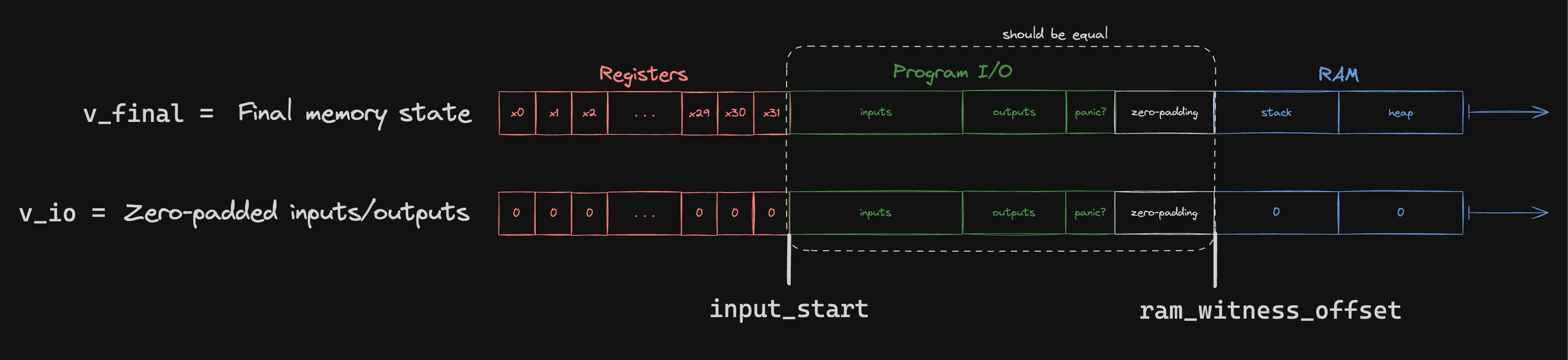

Jolt ensures correctness of guest program outputs via the output check sumcheck. Guest I/O operations, including outputs, inputs, and termination or panic bits, occur within a designated memory region. At execution completion, outputs and relevant status bits are written into this region.

Verifying that the claimed outputs and status bits are correct amounts to checking that the following polynomials agree at the indices corresponding to the program I/O memory region:

Note that val_io is only contains information known by the verifier.

To check that these two polynomials are equal, we use the following sumcheck:

where is a "range mask" polynomial, equal to 1 at all the indices corresponding to the program I/O region and 0 elsewhere. The MLE for a range mask polynomial that isolates the range can be written as follows:

It is implemented in code as RangeMaskPolynomial.

This output check sumcheck generates a claim about the final memory state polynomial (), which, being virtual, is proven using the evaluation sumcheck:

Intuitively, the delta between the final and initial state of some memory cell is the sum of all increments to that cell.

The verifier also requires evaluations of the advice sections to compute . As with the evaluation described above, the prover provides these evaluations. Since both sumchecks use the same randomness, the prover only needs to provide one evaluation proof per advice type. If different randomness were used, separate proofs would be required for each sumcheck.

You may have noticed in the above expression that if a polynomial only over cyclce variables, while the book describes . This change is explained in wv virtualization.

Both the and evaluation sumchecks use the virtual polynomial, with claims subsequently proven via ra virtualization sumcheck.

Instruction execution

One distinguishing feature of Jolt among zkVM architectures is how it handles instruction execution, i.e. proving the correct input/output behavior of every RISC-V instruction in the trace. This is primarily achieved through the Shout lookup argument.

Large Lookup Tables and Prefix-Suffix Sumcheck

The Shout instance for instruction execution must query a massive lookup table -- effectively of size , since the lookup query is constructed from two 64-bit operands. This parameter regime is discussed in the Twist and Shout paper (Section 7), which proposes the use of sparse-dense sumcheck. However, upon implementation it became clear that sparse-dense sumcheck did not generalize well to the full RISC-V instruction set.

Instead, Jolt introduces a new algorithm: prefix-suffix sumcheck, described in the appendix of Proving CPU Executions in Small Space. Like the sparse-dense algorithm, it requires some structure in the lookup table:

- The lookup table must have an MLE that is efficiently evaluable by the verifier. The

JoltLookupTabletrait encapsulates this MLE. - The lookup index can be split into a prefix and suffix, such that MLEs can be evaluated independently on the two parts and then recombined to obtain the desired lookup entry.

- Every prefix/suffix MLE is efficiently evaluable (constant time) on Boolean inputs

The prefix-suffix sumcheck algorithm can be conceptualized as a careful application of the distributive law to reduce the number of multiplications required for a sumcheck that would otherwise be intractably large.

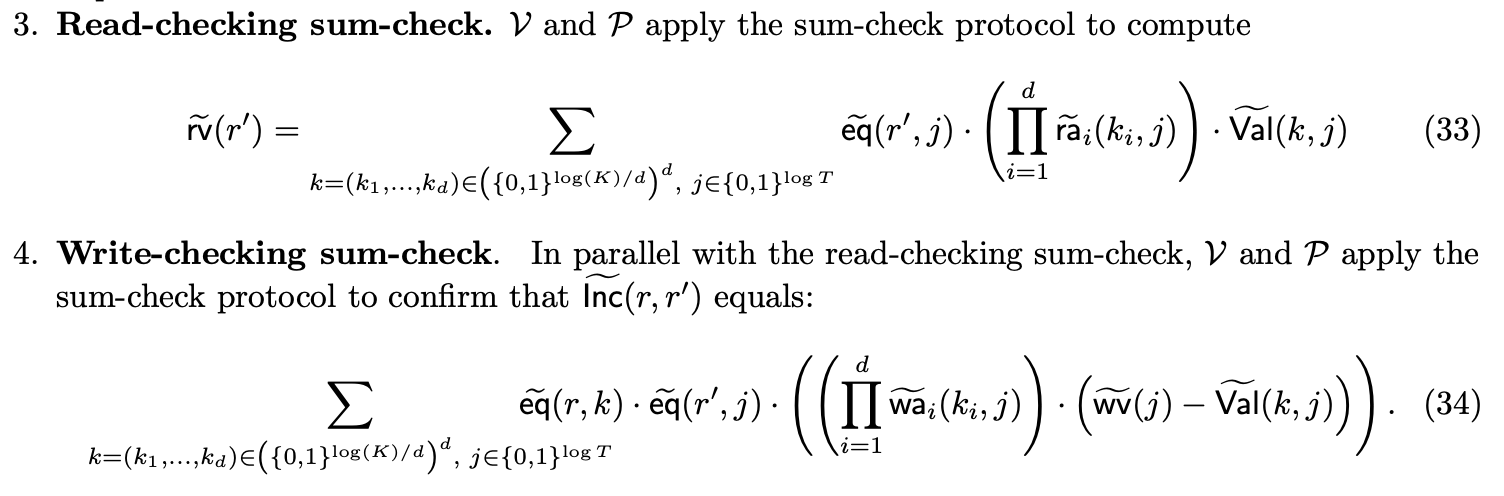

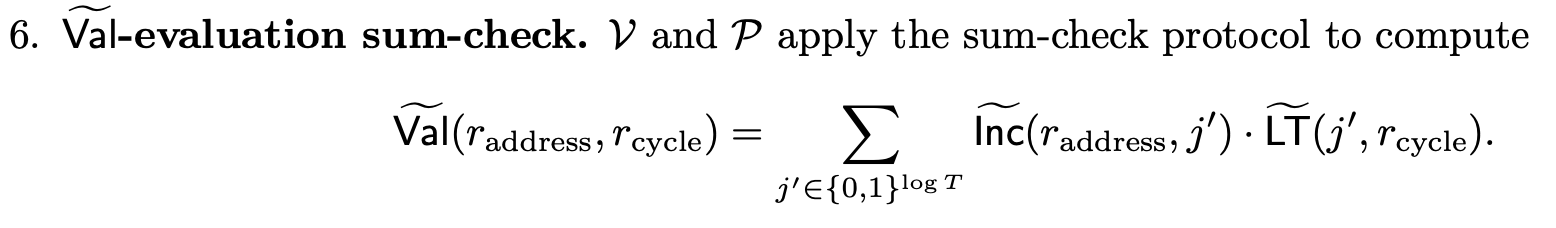

To unpack this, consider the read-checking sumcheck for Shout, as presented in the paper.

Naively, this would require multiplications, which is far too large given and . But suppose has prefix-suffix structure. The key intuition of prefix-suffix structure is captured by the following equation:

You can think of and as the high-order and low-order "bits" of , respectively, obtained by splitting at some partition index. The PrefixSuffixDecomposition trait specifies which prefix/suffix MLEs to evaluate and how to combine them.

We will split eight times at eight different indices, and these will induce the eight phases of the prefix-suffix sumcheck. Each of the eight phases encompasses 16 rounds of sumcheck (i.e. 16 of the address variables ), so together they comprise the first rounds of the read-checking sumcheck.

Given our prefix-suffix decomposition of , we can rewrite our read-checking sumcheck as follows:

Note that we have replaced with just . Since is degree 1 in each variable, we will treat it as a single multilinear polynomial while we're binding those variables (the first rounds). The equation as written above depicts the first phase, where is the first 16 variables of , and is the last 112 variables of .

Rearranging the terms in the sum, we have:

Note that the summand is degree 2 in , the variables being bound in the first phase:

- appears in

- also appears in appears in the paranthesized expression in

Written in this way, it becomes clear that we can treat the first 16 rounds as a mini-sumcheck over just the 16 variables, and with just multilinear terms. If we can efficiently compute the coefficients of each mulitlinear term, the rest of this mini-sumcheck is efficient. Each evaluation of can be computed in constant time, so that leaves the parenthesized term:

This can be computed in : since is one-hot, we can do a single iteration over and only compute the terms of the sum where . We compute a table of evaluation a priori, and can be evaluated in constant time on Boolean inputs.

After the first phase, the high-order 16 variables of will have been bound. We will need to use a new sumcheck expression for the next phase:

Now are random values that the first 16 variables were bound to, and are the next 16 variables of . Meanwhile, now represents the last 96 variables of .

This complicates things slightly, but the algorithm follows the same blueprint as in phase 1. This is a sumcheck over the 16 variables, and there are two multilinear terms. We can still compute each evaluation of in constant time, and we can still compute the parenthesized term in time (observe that there is exactly one non-zero coefficient of per cycle ).

After the first rounds of sumcheck, we are left with:

which we prove using the standard linear-time sumcheck algorithm. Note that here is a virtual polynomial.

Prefix and Suffix Implementations

Jolt modularizes prefix/suffix decomposition using two traits:

SparseDensePrefix(underprefixes/)SparseDenseSuffix(undersuffixes/)

Each prefix/suffix used in a lookup table implements these traits.

Multiplexing Between Instructions

An execution trace contains many different RISC-V instructions.

Note that there is a many-to-one relationship between instructions and lookup tables -- multiple instructions may share a lookup table (e.g., XOR and XORI).

To manage this, Jolt uses the InstructionLookupTable trait, whose lookup_table method returns an instruction's associated lookup table, if it has one (some instructions do not require a lookup).

Boolean lookup table flags indicate which table is active on a given cycle. At most one flag is set per cycle. These flags allow us to "multiplex" between all of the lookup tables:

where the sum is over all lookup tables .

Only one table's flag is 1 at any given cycle , so only that table's contributes to the sum.

This term becomes a drop-in replacement for as it appears in the Shout read-checking sumcheck:

Note that each here has prefix-suffix structure.

raf Evaluation

The -evaluation sumcheck in Jolt deviates from the description in the Twist/Shout paper. This is mostly an artifact of the prefix-suffix sumcheck, which imposes some required structure on the lookup index (i.e. the "address" variables ).

Case 1: Interleaved operands

Consider, for example, the lookup table for XOR x y.

Intuitively, the lookup index must be crafted from the bits of x and y.

A first attempt might be to simply concatenate the bits of x and y, i.e.:

Unfortunately, there is no apparent way for this formulation to satisfy prefix-suffix structure.

Instead we will interleave the bits of x and y, i.e.

With this formulation, the prefix-suffix structure is easily apparent. Suppose the prefix-suffix split index is 16, so:

Then XOR x y has the following prefix-suffix decomposition:

By inspection, effectively computes the 8-bit XOR of the high-order bits of x and y, while computes the 56-bit XOR of the low-order bits of x and y.

Then the full result XOR x y is obtained by concatenating (adding) the two results.

Now that we've confirmed that we have something with prefix-suffix structure, we can write down the -evaluation sumcheck expression.

Instead of a single polynomial, here we have two lookup operands x and y, which are called LeftLookupOperand and RightLookupOperand in code.

These are the values that appear in Jolt's R1CS constraints.

The point of the -evaluation sumcheck is to relate these (non-one-hot) polynomials to their one-hot counterparts.

In the context of instruction execution Shout, this means the polynomial.

Since we have two -like polynomials, we have two sumcheck instances:

This captures the interleaving behavior we described above: LeftLookupOperand is the concatenation of the "odd bits" of , while RightLookupOperand is the concatenation of the "even bits" of .

Case 2: Single operand

Many RISC-V instructions are similar to XOR, in that interleaving the operand bits lends itself to prefix-suffix structure.

However, there are some instructions where this is not the case.

The execution of some arithmetic instructions, for example, are handled by computing the corresponding operation in the field, and applying a range-check lookup to the result to truncate potential overflow bits.

In this case, the lookup index corresponds to the (single) value y being range-checked, so we do not interleave its bits with another operand.

Instead, we just have:

In this case, we have the following sumchecks:

LeftLookupOperand is 0 and RightLookupOperand will be the concatenation of all the bits of .

In order to handle Cases 1 and 2 simultaneously, we can use the same "multiplexing" technique as in the read-checking sumcheck. We use a flag polynomial to indicate which case we're in:

where , , and are circuit flags.

Prefix-suffix structure

These sumchecks have similar structure to the read-checking sumcheck. A side-by-side comparison of the read-checking sumcheck with the sumchecks for Case 1:

As it turns out, and have prefix-suffix structure, so we can also use the prefix-suffix algorithm for these sumchecks. Due to their similarities, we batch the read-checking and these -evaluation sumchecks together in a bespoke fashion.

Other sumchecks

ra virtualization

The lookup tables used for instruction execution are of size , so we set as our decomposition parameter such that .

Similar to the RAM Twist instance, we opt to virtualize the polynomial. In other words, Jolt simply carries out the read checking and evaluation sumchecks as if .

At the conclusion of these sumchecks, we are left with claims about the virtual polynomial. Since the polynomial is uncommitted, Jolt invokes a separate sumcheck that expresses an evaluation in terms of the constituent polynomials. The polynomials are, by definition, a tensor decomposition of , so the " virtualization" sumcheck is the following:

Since the degree of this sumcheck is , using the standard linear-time sumcheck prover algorithm would make this sumcheck relatively slow. However, we employ optimizations specific to high-degree sumchecks, adapting techniques from the Karatsuba and Toom-Cook multiplication algorithms.

One-hot checks

Jolt enforces that the polynomials used for instruction execution are one-hot, using a Booleanity and Hamming weight sumcheck as described in the paper. These implementations follow the Twist and Shout paper closely, with no notable deviations.

Bytecode

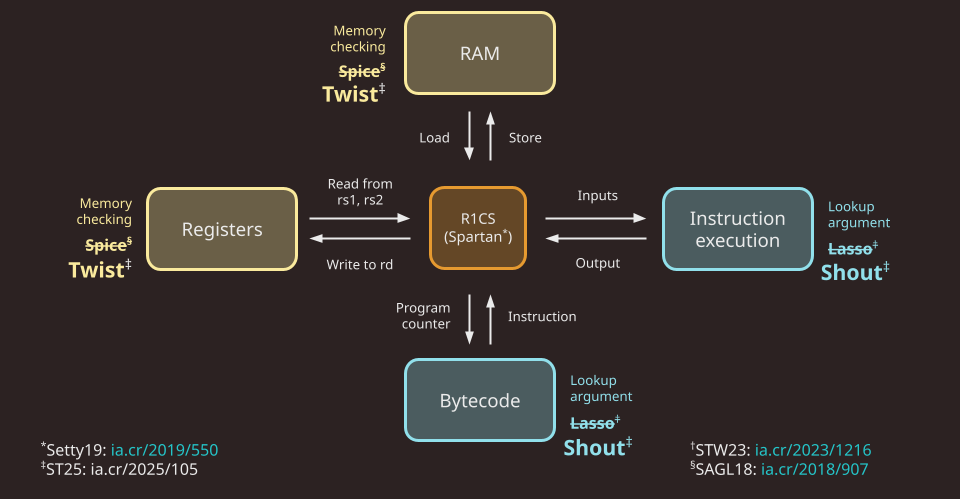

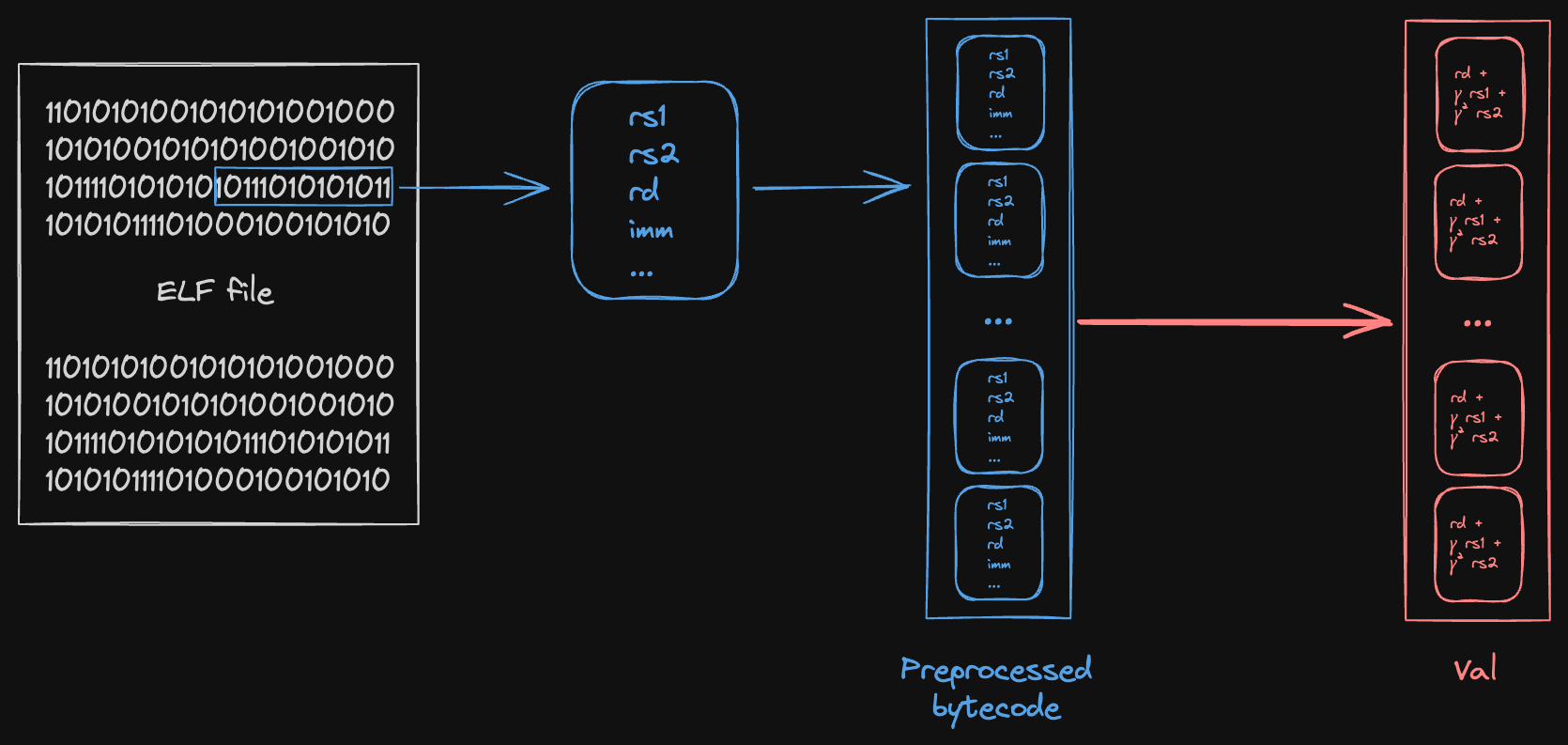

At each cycle of the RISC-V virtual machine, the current instruction (as indicated by the program counter) is "fetched" from the bytecode and decoded. In Jolt, this is proven by treating the bytecode as a lookup table, and fetches as lookups. To prove the correctness of these lookups, we use the Shout lookup argument.

One distinguishing feature of the bytecode Shout instance is that we have multiple instances of the read-checking and -evaluation sumchecks. Intuitively, the bytecode serves as the "ground truth" of what's being executed, so one would expect many virtual polynomial claims to eventually lead back to the bytecode. And this holds in practice –– in the Jolt sumcheck DAG diagram, we see that there are five stages of read-checking claims pointing to the bytecode read-checking node, and two evaluation claims folded into stages 1 and 3.

Each stage has its own unique opening point, so having in-edges of different colors implies that multiple instances of that sumcheck must be run in parallel to prove the different claims.

Read-checking

Another distinguishing feature of the bytecode Shout instance is that we treat each entry of lookup table as containing a tuple of values, rather than a single value. Intuitively, this is because each instruction in the bytecode encodes multiple pieces of information: opcode, operands, etc.

The figure below loosely depicts the relationship between bytecode and polynomial.

We start from some ELF file, compiled from the guest program. For each instruction in the ELF (raw bytes), we decode/preprocess the instruction into a structured format containing the individual witness values used in Jolt:

Then, we compute a Reed-Solomon fingerprint of some subset of the values in the tuple, depending on what claims are being proven. These fingerprints serve as the coefficients of the polynomial for that read-checking instance.

-evaluation

-evaluation claims for the program counter are folded into the multi-stage read-checking sumcheck rather than being handled separately. There are two claims:

- raf claim 1: From the Spartan "outer" sumcheck, folded into Stage 1 with weight

- raf claim 3: From the Spartan "shift" sumcheck, folded into Stage 3 with weight

The polynomial in the context of bytecode is the expanded program counter (), which maps each cycle to the bytecode index being executed. This is distinct from , which is the ELF/memory address.

Batching read-checking and -evaluation together

The bytecode read-checking sumcheck combines claims from five different sumcheck stages into a single batched sumcheck, using two levels of random linear combinations (RLCs):

- Stage-level RLC (using ): Combines the five stages together

- Per-stage RLC (using ): Combines multiple claims within each stage

The five stages are:

- Stage 1: Spartan outer sumcheck claims (program counter, immediate values, circuit flags)

- Stage 2: Product virtualization claims (jump/branch flags, register write flags)

- Stage 3: Shift sumcheck claims (instruction operand flags, virtual instruction metadata)

- Stage 4: Register read-write checking claims (register addresses)

- Stage 5: Register value evaluation and instruction lookup claims (register addresses, lookup table flags)

Additionally, bytecode claims for the program counter are folded into stages 1 and 3.

Combined Sumcheck Expression

The overall sumcheck proves the following identity:

where:

- ranges over bytecode indices (address space)

- ranges over cycle indices (time dimension)

- is the read access polynomial indicating cycle accesses bytecode row

- is the stage-specific value polynomial encoding instruction data

- is the identity polynomial that converts a bytecode index from binary form to the corresponding field element (used for claims)

- is the stage-folding challenge

- challenges are used within each to combine multiple sub-claims

Note that the claims treat the expanded program counter as , not UnexpandedPC.

Stage Polynomials

Each of the five claims has a corresponding polynomial that encodes different instruction properties. Using per-stage challenges , these are defined as:

Stage 1 (Spartan outer sumcheck):

Stage 2 (Product virtualization):

Stage 3 (Shift sumcheck):

Stage 4 (Register read-write checking):

Stage 5 (Register value evaluation and instruction lookups):

where:

- is the instruction's ELF/memory address (not the bytecode index )

- equals 1 if instruction has destination register , and 0 otherwise (similarly for and )

- Various boolean flags indicate instruction properties

Instruction address

Each instruction in the bytecode has two associated "addresses":

- Bytecode index : its index in the expanded bytecode. "Expanded" bytecode refers to the preprocessed bytecode, after instructions are expanded to their virtual sequences. This is what the polynomial uses to indicate which bytecode row is accessed.

- ELF/memory address : its memory address as given by the ELF. All the instructions in a virtual sequence are assigned the address of the "real" instruction they were expanded from. This is stored as part of the instruction data in bytecode row .

The bytecode index is used for addressing within the sumcheck (the variable in the double sum). The ELF address is used to enforce program counter updates in the R1CS constraints, and is treated as a part of the tuple of values in the preprocessed bytecode.

The "outer" and shift sumchecks in Spartan output claims about the virtual UnexpandedPC polynomial, which corresponds to the ELF address. These claims are proven using bytecode read-checking (specifically, they appear in the Stage 1 and Stage 3 value polynomials).

Flags

There are two types of Boolean flags used in Jolt:

- Circuit flags, used in R1CS constraints